ACCESSIBLE INTERACTIVE 3D MODELS FOR BLIND AND LOW-VISION PEOPLE

Samuel Reinders, Department of Human-Centred Computing, Monash University, Melbourne, Victoria, Australia, samuel.reinders@monash.edu

Abstract

Blind and low-vision (BLV) people experience difficulty accessing graphical information, particularly in travel and education. Tactile diagrams and 3D printed models can improve access to graphical information for BLV people; however, these formats can convey only limited detail and context. Interactive 3D printed models (I3Ms) exist, but many rely on passive audio labels that do little to empower BLV people to build knowledge and interpret content independently. This project investigates the creation of I3Ms that offer more engaging experiences, with a focus on facilitating independent exploration and knowledge discovery. Specifically, this project explores how BLV people want to interact with I3Ms and which interactive functionalities and behaviours, such as conversational interfaces and model agency, I3Ms should support, and seeks to understand the relationship between I3Ms and conventional accessible graphics.

Introduction & Background

Accessing graphical information can be difficult for blind and low-vision (BLV) people. Research reveals that limited access to and difficulty interpreting this information can impact education opportunities [6] and make independent travel difficult [20], leading to reductions in confidence and quality of life [13].

Tactile graphics provide BLV people with representations of the types of graphical information used in orientation and mobility (O&M) training and in educational curricula. Common production methods share limitations that restrict their usefulness, including an inability to convey height or depth appropriately [11]. Additionally, tactile graphics are often paired with braille labels, whose complexity is limited by the space that braille requires [5, 11, 22], by the need for braille keys when labels are too long, and by the fact that braille is inaccessible to a large portion of BLV people [17].

3D printing offers an alternative, allowing the production of content that is three-dimensional in nature, such as maps and many concepts taught in STEM subjects (e.g. biology, astronomy and chemistry). 3D printed models have been used to support accessible classroom curricula [12, 15], and commodity printing allows models to be produced with effort and cost comparable to tactile graphics. While these models can carry braille labels, doing so is problematic because braille is difficult to 3D print on a model. For this reason, many researchers have investigated interactive 3D printed models (I3Ms) that include audio labels [9-11, 14, 18, 21], but these typically provide only limited detail and context.

I3Ms that include richer interactive functionality may help overcome this limitation and enable more engaging experiences, empowering BLV people as they use I3Ms to broaden their knowledge in school environments or during independent navigation. One possibility is for I3Ms to include interfaces similar to conversational agents, allowing users to ask questions in natural language. Conversational interfaces such as Siri, Alexa and Google Assistant are increasingly used in daily life. Given research finding that BLV people find voice interaction convenient [2], it is unsurprising that adoption of devices offering conversational agents, most of which support voice and text input, is high amongst BLV people. Little research has applied conversational interfaces in the context of accessibility and I3Ms; one I3M offers limited interactions focused on indoor navigation [7] rather than education.

Many of the interactions supported by conversational interfaces are passive in nature: the end-user must activate the agent and request that a task be performed. There is therefore an opportunity to expand the level of interactivity these interfaces offer. Advances in "intelligent" agents mean that a device may be able to take on a more proactive role, exhibiting a level of model agency capable of helping guide the user, or an embodied personality. Such agents have been studied in health care contexts [3, 23].

An I3M with these characteristics could facilitate deeper engagement with the model and its wider context, similar to how pedagogical agents can assist learning by emulating human-human social interaction [16]. Recent research into the design of conversational interfaces and their use by BLV people has found that their design assumes a human-human conversation model of interaction [4]. It has been suggested that this may limit their accessibility, as the extraneous and slow output forces BLV people, many of whom are familiar with screen reader software and have increased listening rates, to interact at a slower pace [1]. However, one study comparing conversational interfaces speaking at different speeds found that BLV users expressed higher satisfaction with agents speaking at an average rate, which appeared more "human", and found faster speech rates, equivalent to screen reader software, more machine-like [8].

An opportunity exists to explore how BLV people would like to interact with conversational interfaces embedded in tangible objects such as I3Ms, particularly whether the physical embodiment of the object or agent itself impacts experiences. My work aims to further the design space of how I3Ms can assist BLV people in accessing graphical information by addressing these gaps and exploring the following research questions:

- How do BLV users want to use and communicate with accessible interactive graphics that have conversational interfaces: as tool-like functional machines, or as social machines?

- Does having a tangible object (the model), level of agency, or embodied personality impact levels of engagement and trust with accessible interactive graphics that have conversational interfaces?

- In what circumstances do I3Ms support or replace the need for current accessible graphics?

Solution & Methodology

This research, in which a series of accessible I3Ms will be created, is guided by a Design Science methodology. It began with an exploratory study, conducted with BLV participants, that focused on uncovering the interaction techniques BLV people would like to use when exploring I3Ms, the types of interactive functionalities and behaviours I3Ms should support, and attitudes towards different levels of model agency. This exploration made use of Wizard-of-Oz (WoZ) prototyping. Design iterations then incorporated functionality informed by these findings into a prototype I3M, including touch-gesture-triggered audio labels and a conversational interface powered by WoZ text-to-speech. A follow-up study was run to validate the WoZ findings with the prototype I3M.

Further prototyping and user studies are now being designed, focusing on functionality related to the use of conversational interfaces. Studies exploring how BLV people want to communicate with conversational agents embedded in I3Ms, and whether the embodiment of such interfaces affects a user’s experience, level of trust and engagement, will soon be undertaken. Their findings will inform further prototyping activities, culminating in a study exploring the role of I3Ms and their relationship to existing accessible graphics such as tactile diagrams and non-interactive 3D printed models.

Current Progress

User Study 1 – Initial Exploration

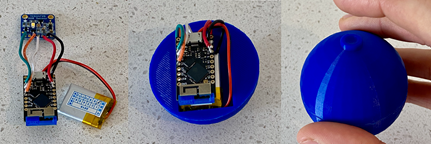

A WoZ exploratory study was conducted to uncover the ways in which BLV people would like to naturally interact with and explore I3Ms, as well as their attitudes towards different levels of model agency [19]. Eight BLV participants interacted with non-interactive 3D printed models (Figure 1) in whatever ways felt natural to them. Participants were told that the models were ‘magical’ and that another researcher, present in the room, would act on behalf of the models to fulfil interactions using a WoZ method. Each model given to a participant had a different level of agency, ranging from passive models that responded only when a participant initiated a deliberate interaction (low-agency), to smarter models capable of introducing themselves and providing assistance if difficulty was encountered (high-agency). Key findings included:

- Participants expected models to provide verbal or haptic output. They relied most on touch: information of a more defined nature (e.g. what an element is, or a basic trivial fact) was typically obtained through a gesture such as a double tap.

- More complex information tended to be obtained through questions aimed directly at the model, with participants treating the models as conversational agents similar to Siri, Alexa and Google Assistant. One participant used the expression ‘Mr Model’ to initiate dialogue, consistent with conversational agent conventions.

- Participants strongly desired independence of control and independence of interpretation while interacting with the models, preferring a low-agency model. Participants sought to discover information through tactual means and deliberate triggering of information held by the model, rather than simply asking it to answer every question.

- Participants’ prior experience appeared to influence their choice of interactions: their knowledge of tactile information gathering shaped how they explored, and previous experience with technologies such as smartphones influenced their use of touch gestures and conversational agent conventions.

User Study 2 – Validation

User Study 2 was designed to incorporate and validate the findings of User Study 1, and involved six returning participants. A fully functional interactive model representing the Solar System was designed (Figure 2), incorporating the interaction functionalities and behaviours identified in User Study 1: participants could pick up individual planets, single or double tap them to extract audio descriptions, and ask questions using a conversational interface driven through WoZ text-to-speech. The types of questions participants could ask were not constrained. The prototype was built using a Raspberry Pi and a touch breakout add-on board, with capacitive touch points wired to each planet. Participants were asked to complete a series of activities with the prototype I3M, designed around independent exploration, knowledge discovery and extraction, and the agency of the model. Studies 1 and 2 were published at CHI ’20 [19]; key findings of Study 2 included:

- Study 2 confirmed earlier findings relating to methods of interaction. Participants used simple touch interaction, including gestures to extract audio descriptions, and asked the model questions, much as they would Siri, Alexa and Google Assistant, when unable to extract the required information by any other means. Together, these behaviours formed a clear hierarchy of interaction:

  - Tactile Exploration - Seen by participants as supporting the greatest level of independence, and likely also the most familiar information-gathering technique.

  - Gesture-Driven Enquiry - Extraction of information through touch gestures, used to retrieve low-level ‘factual’ information that cannot be obtained through tactile exploration.

  - Natural Language Interrogation - While participants were comfortable asking the model questions, conversation was used largely to fill knowledge gaps that could not be filled through touch or gestural means.

- Study 2 further confirmed participants’ desire to be in control of their experience and to assemble their own understanding and interpretation, aligning with the preference for low-agency models in Study 1. However, participants did find it useful when the model intervened to assist after a component was placed incorrectly.

- Participants were generally comfortable asking the models questions, and preferred the expression “Hey Model!” to initiate dialogue.
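The Study 2 prototype let participants single or double tap planets wired to capacitive touch points. The paper does not describe how taps were classified, so the following is only a minimal Python sketch of one common approach, assuming the touch board yields timestamped touch-start events per electrode: two touches on the same electrode within a short window count as a double tap. The 0.35 s window, the `classify_taps` and `describe` helpers, and the electrode-to-planet wiring are all hypothetical, and reading the actual breakout board is omitted.

```python
from typing import Dict, List, Tuple

# Hypothetical maximum gap (seconds) between two touches counted as a double tap.
DOUBLE_TAP_WINDOW = 0.35

# Hypothetical wiring of electrode numbers to planets; not taken from the study.
ELECTRODE_TO_PLANET: Dict[int, str] = {2: "Earth", 3: "Mars"}


def classify_taps(
    events: List[Tuple[float, int]], window: float = DOUBLE_TAP_WINDOW
) -> List[Tuple[int, str]]:
    """Turn (timestamp, electrode) touch-start events into single/double taps."""
    gestures: List[Tuple[int, str]] = []
    ordered = sorted(events)  # process touches in time order
    i = 0
    while i < len(ordered):
        t, electrode = ordered[i]
        if i + 1 < len(ordered):
            t_next, next_electrode = ordered[i + 1]
            # A second touch on the same electrode soon after the first is a double tap.
            if next_electrode == electrode and t_next - t <= window:
                gestures.append((electrode, "double"))
                i += 2
                continue
        gestures.append((electrode, "single"))
        i += 1
    return gestures


def describe(electrode: int, gesture: str) -> str:
    """Hypothetical mapping: a single tap names the planet, a double tap gives more."""
    planet = ELECTRODE_TO_PLANET.get(electrode, "Unknown object")
    if gesture == "single":
        return planet
    return f"{planet}: extended audio description"


if __name__ == "__main__":
    touches = [(0.00, 2), (0.20, 2), (1.00, 3)]
    for electrode, gesture in classify_taps(touches):
        print(describe(electrode, gesture))
```

In a real-time version, a single tap cannot be announced until the window has elapsed without a second touch, which adds a small delay before the audio description plays; this trade-off is a design assumption of the sketch, not a finding of the study.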

Next Steps

Conversational Interfaces

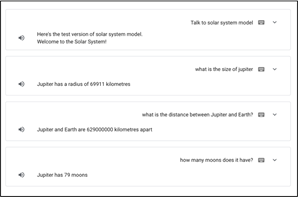

To further explore the role of conversational agent interfaces and how they can be integrated into accessible I3Ms, prototyping of a conversational interface has begun. Two conversational agents have been built using the Google Assistant SDK and Dialogflow, each facilitating interactions with its own prototype I3M: one representing the Solar System (Figure 3) and one representing a world map. Participants can perform touch gestures on predefined hot spots (e.g. Planet Earth on the former and the Pacific Ocean on the latter) to extract low-level factual information stored in the conversational interface, and can use natural language to interrogate each I3M by asking questions that fill gaps in their knowledge (Figure 4). The interface also supports customisable speech rate (adjustable to either a screen reader rate or that of a standard virtual assistant) and verbosity level. Work is currently underway to allow the interface to respond to and initiate small talk during interactions and to exhibit a rudimentary personality.
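The paper does not detail how hot-spot content, speech rate and verbosity are represented inside the Google Assistant SDK and Dialogflow agents. As a rough, platform-independent illustration, the Python sketch below assumes each hot spot stores a brief and a detailed description, and that the chosen speech rate is expressed through the standard SSML `<prosody rate>` attribute, which Google Assistant supports for spoken responses. The `HOT_SPOTS` content, the two rate presets and the `hot_spot_response` helper are hypothetical and do not call any Dialogflow API.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict
from xml.sax.saxutils import escape


class Verbosity(Enum):
    BRIEF = "brief"
    DETAILED = "detailed"


# Hypothetical presets, expressed as SSML prosody rates relative to the default voice.
SPEECH_RATES: Dict[str, str] = {"assistant": "100%", "screen_reader": "200%"}

# Hypothetical hot-spot content for the two prototype models.
HOT_SPOTS: Dict[str, Dict[Verbosity, str]] = {
    "earth": {
        Verbosity.BRIEF: "Earth.",
        Verbosity.DETAILED: "Earth, the third planet from the Sun.",
    },
    "pacific_ocean": {
        Verbosity.BRIEF: "Pacific Ocean.",
        Verbosity.DETAILED: "The Pacific Ocean, the largest ocean on Earth.",
    },
}


@dataclass
class Preferences:
    rate: str = "assistant"
    verbosity: Verbosity = Verbosity.BRIEF


def hot_spot_response(spot: str, prefs: Preferences) -> str:
    """Build an SSML reply for a touched hot spot using the user's preferences."""
    text = HOT_SPOTS.get(spot, {}).get(prefs.verbosity, "No information for this spot.")
    rate = SPEECH_RATES[prefs.rate]
    # escape() keeps characters such as & or < from breaking the SSML markup.
    return f'<speak><prosody rate="{rate}">{escape(text)}</prosody></speak>'


if __name__ == "__main__":
    fast_detailed = Preferences(rate="screen_reader", verbosity=Verbosity.DETAILED)
    print(hot_spot_response("pacific_ocean", fast_detailed))
```

Keeping rate and verbosity as per-user preferences, rather than baking them into each response string, lets the same hot-spot content serve users who prefer screen-reader speeds as well as those who prefer a more conversational pace.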

Study 3 is currently being designed to explore the role of conversational interfaces embedded in I3Ms, particularly how BLV people choose to interact and communicate with them. Focus is being placed on uncovering factors which determine whether users choose to communicate using human-human or more machine-like conversation, and whether the physical tangible object that an I3M represents, or associated level of embodied personality, influences interactions in terms of trust and engagement.

Study 3 will also include a new user group, sighted participants, to explore whether these types of models are of interest and usable in mainstream contexts, in an effort to reduce the stigma many BLV people feel when using “unconventional” aids and tools.

The Use & Role of I3Ms

The role of I3Ms and their relationship to existing accessible graphics will be explored. Rather than treating accessible graphic formats as mutually exclusive, this work will identify the situations and contexts in which I3Ms complement tactile diagrams and 3D printed models without electronics, and those in which I3Ms replace the need for conventional accessible graphics. Participants will perform a series of qualitative and quantitative tasks to benchmark preference and performance.

The different environments in which I3Ms may prove useful, for both BLV and sighted people, are also to be explored. In addition to the scenarios mentioned previously, such as school environments and aiding independent navigation, I3Ms may have direct utility in many public spaces such as museums and exhibitions, art galleries, shopping centres and other public venues.

Contributions

The findings of this project stand to contribute knowledge relevant to multiple audiences. Major contributions of this work include:

- A hierarchy of interaction describing the interaction strategy employed by BLV people when independently engaging with I3Ms across multiple modalities, which will prove useful to accessibility researchers and the wider HCI community.

- The integration of conversational interfaces into I3Ms, in particular the exploration of levels of model agency, assistive behaviours, and agent embodiment, will prove useful to HCI and HRI communities. Additionally, attitudes of BLV people towards these aspects with respect to their sense of independence and ability to discover knowledge will assist accessibility researchers.

- A series of design guidelines will support further knowledge building and help accessible graphic producers and accessibility researchers produce I3Ms.

Acknowledgments

This research is supported by an Australian Government Research Training Program Scholarship.

References

[1] Ali Abdolrahmani, Ravi Kuber, and Stacy M. Branham. 2018. "Siri Talks at You": An Empirical Investigation of Voice-Activated Personal Assistant (VAPA) Usage by Individuals Who Are Blind. ASSETS ’18, 249-258.

[2] Shiri Azenkot and Nicole B. Lee. 2013. Exploring the Use of Speech Input by Blind People on Mobile Devices. ASSETS ’13, 1-8.

[3] Timothy W. Bickmore, Daniel Schulman, and Candace Sidner. 2013. Automated interventions for multiple health behaviors using conversational agents. Patient Education and Counseling 92, 2 (2013), 142-148.

[4] Stacy M. Branham and Antony Rishin Mukkath Roy. 2019. Reading Between the Guidelines: How Commercial Voice Assistant Guidelines Hinder Accessibility for Blind Users. ASSETS ’19, 446-458.

[5] Anke Brock, Philippe Truillet, Bernard Oriola, Delphine Picard, and Christophe Jouffrais. 2012. Design and User Satisfaction of Interactive Maps for Visually Impaired People. ICCHP ’12, 544-551.

[6] Matthew Butler, Leona Holloway, Kim Marriott, and Cagatay Goncu. 2017. Understanding the graphical challenges faced by vision-impaired students in Australian universities. Higher Education Research & Development 36, 1 (2017), 59-72.

[7] Luis Cavazos Quero, Jorge Iranzo Bartolomé, Dongmyeong Lee, Yerin Lee, Sangwon Lee, and Jundong Cho. 2019. Jido: A Conversational Tactile Map for Blind People. ASSETS ’19, 682-684.

[8] Dasom Choi, Daehyun Kwak, Minji Cho, and Sangsu Lee. 2020. "Nobody Speaks That Fast!" An Empirical Study of Speech Rate in Conversational Agents for People with Vision Impairments. CHI ’20, 1-13.

[9] Stéphanie Giraud, Anke M. Brock, Marc J.-M. Macé, and Christophe Jouffrais. 2017. Map Learning with a 3D Printed Interactive Small-Scale Model: Improvement of Space and Text Memorization in Visually Impaired Students. Frontiers in Psychology 8 (2017), 930.

[10] Timo Götzelmann. 2016. LucentMaps: 3D Printed Audiovisual Tactile Maps for Blind and Visually Impaired People. ASSETS ’16, 81-90.

[11] Leona Holloway, Kim Marriott, and Matthew Butler. 2018. Accessible Maps for the Blind: Comparing 3D Printed Models with Tactile Graphics. CHI ’18, 1-13.

[12] Shaun K. Kane and Jeffrey P. Bigham. 2014. Tracking @stemxcomet: Teaching Programming to Blind Students via 3D Printing, Crisis Management, and Twitter. SIGCSE ’14, 247-252.

[13] Jill Keefe. 2005. Psychosocial impact of vision impairment. International Congress Series 1282 (2005), 167-173.

[14] Steven Landau. 2009. An Interactive Talking Campus Model at Carroll Center for the Blind. Touch Graphics. (2009).

[15] Samantha McDonald, Joshua Dutterer, Ali Abdolrahmani, Shaun K. Kane, and Amy Hurst. 2014. Tactile Aids for Visually Impaired Graphical Design Education. ASSETS ’14, 275-276.

[16] Roxana Moreno, Richard E. Mayer, Hiller A. Spires, and James C. Lester. 2001. The Case for Social Agency in Computer-Based Teaching: Do Students Learn More Deeply When They Interact With Animated Pedagogical Agents? Cognition and Instruction 19, 2 (2001), 177-213.

[17] National Federation of the Blind. 2009. The Braille Literacy Crisis in America: Facing the Truth, Reversing the Trend, Empowering the Blind. (2009).

[18] Andreas Reichinger, Anton Fuhrmann, Stefan Maierhofer, and Werner Purgathofer. 2016. Gesture-Based Interactive Audio Guide on Tactile Reliefs. ASSETS ’16, 91-100.

[19] Samuel Reinders, Matthew Butler, and Kim Marriott. 2020. “Hey Model!” – Natural User Interactions and Agency in Accessible Interactive 3D Models. CHI ’20, 1-13.

[20] Rebecca Sheffield. 2016. International Approaches to Rehabilitation Programs for Adults who are Blind or Visually Impaired: Delivery Models, Services, Challenges and Trends.

[21] Lei Shi, Holly Lawson, Zhuohao Zhang, and Shiri Azenkot. 2019. Designing Interactive 3D Printed Models with Teachers of the Visually Impaired. CHI ’19, 1-14.

[22] Lei Shi, Idan Zelzer, Catherine Feng, and Shiri Azenkot. 2016. Tickers and Talker: An Accessible Labeling Toolkit for 3D Printed Models. CHI ’16, 4896-4907.

[23] Candace L. Sidner, Timothy Bickmore, Bahador Nooraie, Charles Rich, Lazlo Ring, Mahni Shayganfar, and Laura Vardoulakis. 2018. Creating New Technologies for Companionable Agents to Support Isolated Older Adults. TiiS ’18, 1-27.