SpokeSense: Developing a Real-Time Sensing Platform for Wheelchair Sports

Patrick Carrington, Carnegie Mellon University, pcarring@cs.cmu.edu

Gierad Laput, Apple, gierad@apple.com

Jeffrey P. Bigham, Carnegie Mellon University, jbigham@cs.cmu.edu

Abstract

SpokeSense is an easy-to-install sensing solution intended for wheelchair basketball players. Our aim with SpokeSense is to provide support for both long-term and real-time analysis of performance information for wheelchair court sport athletes. We developed and tested SpokeSense in the context of wheelchair basketball. We extend prior work quantifying wheelchair court-sport activity by enabling real-time review of performance data and other important events as well as supporting long-term logging. Our secondary focus was on creating a means of tracking activity that would be accurate, safe to use, and practical. In this article, we discuss the design of our sensor to support practical use during wheelchair basketball activities, provide access to relevant accurate data, and support real-time and post-hoc review. We discuss the performance of our sensor and the initial interface to support data review. Finally, we discuss broader implications for the design of assistive technologies for physical activity.

1. Introduction

To achieve high athletic performance, athletes and coaches need relevant and actionable data. As a result, substantial work has gone into sensing and conveying information about athletic performance in mainstream sports such as basketball, football, and track & field. Relatively little work has considered wheelchair sports, which are increasingly popular and present a number of new sensing challenges. First, athletes participating in wheelchair sports do not take steps [5], unlike athletes in many other sports, and so the sensing challenges are different. Second, the form factors of acceptable sensing solutions are different (e.g., wheelchair athletes generally cannot wear wrist-worn devices during competition). Finally, wheelchair athletes care about different aspects of their experience and performance, even when playing the same sport, and these aspects likely need to be sensed differently than in more traditional sports.

As wheelchair sports have become more popular, new assistive technologies have been developed to improve performance, especially for court sports like basketball, rugby, and tennis. Physical improvements to the wheelchair and customizations for individual athletes can lead to improved performance [1]. Experts have a growing interest in facilitating these changes, especially for Paralympic sports [8].

Quantifying these activities has been challenging for a number of reasons, including accuracy, safety, and practicality. The current state of the art for these assistive devices is to use miniaturized data loggers (MDLs) to collect and store motion information over long periods of time (e.g., months). This information can be especially valuable to practitioners, including coaches and trainers, for modifying future training procedures [10]. Prior research has explored the limitations of MDLs in capturing accurate representations of the activities of wheelchair athletes. The MDL has been shown to be useful for estimating speeds and distance traveled in wheelchair sports. However, the MDL exhibits reduced accuracy for speed and distance during peak activity intervals [7]. Another limitation that we have found in practice is that data is only collected and stored by the sensor, which benefits battery life and longevity. As a result, data can only be reviewed long after the activity has been performed. While beneficial for long-term adjustments to training programs, this limits the MDL's ability to support in-the-moment changes or adjustments.

2. SpokeSense

2.1 Design Requirements - Related Work and Practical Constraints

Trackers and loggers for physical activity have often been limited in their ability to understand their own context of use and instead rely on the user to contextualize their own data. This approach is quite useful for many general-use devices; however, some of this burden can be lifted during wheelchair sports by leveraging the type of wheelchair being used.

Sensors attached to the wheel have the distinct advantage of sensing wheelchair motions directly (e.g. rotations of the wheel). Coulter et al.[6] and Sonenblum et al. [14] explored the use of accelerometers to measure wheelchair propulsion and kinematics. Shepherd et al.[13] provide guidelines for creating propulsion measurement systems for wheelchair court sports based on existing literature discussing approaches for the use of inertial measurement units (IMUs). Multiple projects have used IMUs to provide accurate measurements of distance, speed, angular velocity (rotation of the wheel), and even to estimate position [15,16,17]. The majority of these solutions have attached sensors to the wheel, the wheelchair frame [15], or a combination of both [16,17]. Other projects considered wearable devices (wrist, arm, etc.) to capture the activities of wheelchair users [12]. These solutions have shown potential to understand more about users’ stroke patterns, exertion, and other physical activities. While both wearable and wheelchair-based approaches are viable, sensing on the wheel is more direct for the movement information we are interested in [6,13]. In addition, Shepherd et al. [13] suggest that wheel-attached sensing with multiple IMUs is an ideal form factor, especially since our aim is to support the measurement of more advanced kinetic features.

SpokeSense leverages both physical and sensed contextual information to support performance-driven reflection: the basketball wheelchair fixes the physical context of use, and sensing detects events of interest in the surrounding environment.

2.2 Implementation and System Description

Our aim was to create a sensing solution that would be lightweight and easy to use. Our system uses two wheel-mounted sensors, which we call Spokes, to capture motion and audio data from the wheelchair. The sensors stream over WiFi to a host computer to analyze and present the data.

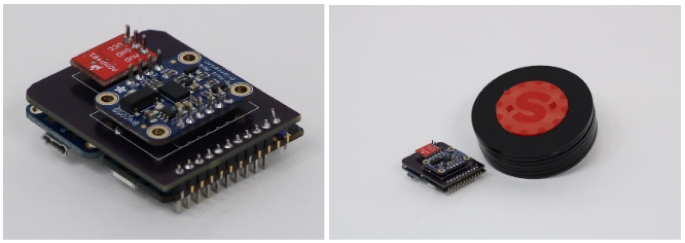

We decided to use wheel-mounted sensors as they would provide the most relevant data, be consistent with existing wheelchair kinematics sensing solutions, and would blend with the design of the wheelchair. We considered the on-site assembly process of using a basketball wheelchair when deciding on this sensor location. Many solutions described in the literature elect an off-center position on the wheel to mount the sensor. In considering the function of the sensors and the aesthetics of our design, as in [4,11], we decided on a hubcap style design which positions the sensors over the wheel hubs.

The attachment and removal of our sensors should be a simple and natural step in the process of using the basketball wheelchair as athletes are accustomed to attaching and detaching their wheels. We made the choice to deliberately restrict access to the quick-release axle considering the removal and reattachment of the sensors along with changing wheels. We reasoned that this would be a realistic scenario given that a player may own multiple wheels but it may not be practical to have multiple sets of sensors for each set of wheels.

Each Spoke consists of 4 components: 1) a Particle Photon STM32F205 microcontroller with a 120MHz CPU, 2) an Adafruit BNO055 Absolute Orientation Sensor (9DOF IMU), 3) an ADMP401 MEMS Microphone, and 4) an Adafruit Powerboost 500 to support the LiPo battery. Each of these components (besides the battery) is mounted to a custom printed circuit to reduce the physical footprint of the different breakout boards. The spoke chip is shown in Figure 1.

The IMU calibrates itself using built-in Adafruit libraries once it is attached to the wheel. The only input required from the user for calibration is the size of the wheel (diameter) and the distance between the wheels where they contact the floor. The data collected by the sensors is processed in two stages: 1) by the sensing module and microcontroller and 2) by the host device. The microcontroller converts the raw sensor values into appropriate units for each sensor. The Photon then transmits the data over WiFi. The SpokeSense application combines and analyzes the sensor data to provide feedback regarding the wheelchair user’s movements.
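As a rough illustration of how the two calibration inputs (wheel diameter and distance between the wheels) could be combined with per-wheel rotation rates on the host, the following sketch applies standard differential-drive kinematics. It is not the SpokeSense implementation: the function names, the degrees-per-second input unit, and the sign convention (both wheels report positive rates when rolling forward) are assumptions, and wheel camber and wheel skid are ignored.

```python
import math


def wheel_linear_speed(omega_deg_per_s: float, wheel_diameter_m: float) -> float:
    """Rim speed in m/s from the wheel's rotation rate about its axle (deg/s)."""
    return math.radians(omega_deg_per_s) * wheel_diameter_m / 2.0


def chair_motion(omega_left: float, omega_right: float,
                 wheel_diameter_m: float, track_width_m: float):
    """Return (forward speed in m/s, turn rate in rad/s) for the chair.

    Assumes both rotation rates are sign-corrected so that forward rolling
    is positive; a positive turn rate means turning toward the left wheel.
    """
    if track_width_m <= 0:
        raise ValueError("track_width_m must be positive")
    v_left = wheel_linear_speed(omega_left, wheel_diameter_m)
    v_right = wheel_linear_speed(omega_right, wheel_diameter_m)
    speed = (v_left + v_right) / 2.0
    turn_rate = (v_right - v_left) / track_width_m
    return speed, turn_rate
```

For example, `chair_motion(360.0, 360.0, 0.6858, 0.8)` describes a chair with 27-inch (0.6858 m) wheels, a hypothetical 0.8 m track width, and both wheels turning one revolution per second, which yields straight-line motion with zero turn rate.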

2.3 Core Metrics and Mid-Level Insights

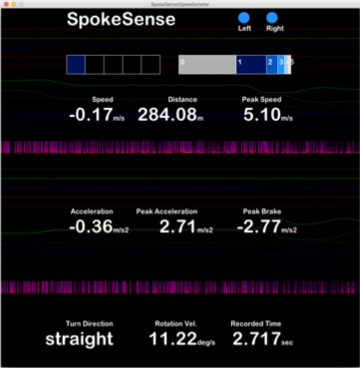

SpokeSense provides feedback at two levels: core metrics, which are direct measurements of the motion of the chair, and mid-level insights, which aim to support a better understanding of an athlete's activities. The core metrics supported by SpokeSense are speed, distance, and acceleration.
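The relationship between the three core metrics can be sketched as follows: given timestamped speed samples, distance is the integral of speed and acceleration is its rate of change. This is a minimal sketch under assumptions of our own (trapezoidal integration, finite differences, non-negative speed magnitudes, and the hypothetical function name `distance_and_acceleration`), not a description of the filtering SpokeSense actually applies.

```python
def distance_and_acceleration(times_s, speeds_mps):
    """Integrate speed to distance and differentiate it to acceleration.

    times_s: strictly increasing sample times in seconds.
    speeds_mps: non-negative speed magnitudes in m/s, one per sample.
    Returns (total distance in m, list of accelerations in m/s^2 between samples).
    """
    if len(times_s) != len(speeds_mps):
        raise ValueError("times_s and speeds_mps must have the same length")
    distance = 0.0
    accelerations = []
    for i in range(1, len(times_s)):
        dt = times_s[i] - times_s[i - 1]
        if dt <= 0:
            raise ValueError("sample times must be strictly increasing")
        # Trapezoidal rule: average of the two speeds times the interval.
        distance += 0.5 * (speeds_mps[i] + speeds_mps[i - 1]) * dt
        # Finite difference between consecutive speed samples.
        accelerations.append((speeds_mps[i] - speeds_mps[i - 1]) / dt)
    return distance, accelerations
```

In practice, differentiating noisy speed samples amplifies noise, so some smoothing before this step would likely be needed.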

We define mid-level insights as a step beyond core metrics, toward insight and qualitative or contextual feedback. Specifically, we detect and incorporate speed zones, orientation change events, and contextual audio clues that may lead to a clearer picture of in-game events.

Speed Zones. We adapted the relative speed zones used by Mason et al. [10] and originally defined by Cahill et al. [2]. In that work, the zones were used to analyze data collected from sensors; we extend it by providing real-time feedback on these speed zones. Our adaptation uses six zones, defined relative to the individual’s maximum speed (Smax):

- 0% of Smax or "Non-Motion"

- < 20% of Smax is "Very Low"

- Between 21 - 50% of Smax is "Low"

- Between 51 - 80% of Smax is "Moderate"

- Between 81 - 94% of Smax is "High"

- > 95% of Smax is "Very High"

Our application continuously assesses the athlete’s performance with respect to speed zones and visualizes the proportion of time spent in each zone during the current recording session. This information is presented in real time as both the currently active speed zone and a dynamically updating view of the time spent in each zone (Figure 2). We hypothesized that feedback on these speed zones could represent the level of effort exerted by a player, given that maintaining maximum speed likely requires a significant amount of effort.
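The zone assignment and the time-in-zone summary can be sketched as below. The zone names come from the list above; everything else is an assumption made for illustration. In particular, the listed ranges leave the values between 20 and 21% and between 94 and 95% unassigned, so this sketch picks its own boundary handling (noted in the comments), assumes uniformly sampled speeds so that sample counts stand in for time, and uses hypothetical function names.

```python
from collections import Counter

ZONE_NAMES = ["Non-Motion", "Very Low", "Low", "Moderate", "High", "Very High"]


def speed_zone(speed: float, s_max: float, stationary_eps: float = 0.0) -> str:
    """Map a speed to one of the six relative speed zones.

    Boundary handling is an assumption: values in the gaps of the published
    ranges are folded into the neighbouring zone as coded below.
    """
    if s_max <= 0:
        raise ValueError("s_max must be positive")
    if abs(speed) <= stationary_eps:
        return "Non-Motion"
    pct = 100.0 * abs(speed) / s_max
    if pct < 20:
        return "Very Low"
    if pct <= 50:
        return "Low"
    if pct <= 80:
        return "Moderate"
    if pct < 95:
        return "High"
    return "Very High"


def zone_proportions(speeds, s_max: float) -> dict:
    """Fraction of (uniformly spaced) samples that fall in each zone."""
    counts = Counter(speed_zone(s, s_max) for s in speeds)
    total = len(speeds)
    return {zone: (counts[zone] / total if total else 0.0) for zone in ZONE_NAMES}
```

Calling `zone_proportions` on the samples received so far, each time a new sample arrives, would give the kind of continuously updated breakdown described above.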

Orientation Change Events: Tilting and Tipping. Since the wheelchair has a relatively restricted motion profile (e.g. the wheels rotate to create linear motion), motion that does not occur only as a result of rotating the wheels can easily be identified. We are able to use the 9-DOF IMU to filter out motion and determine the gravity vector for each wheel. We can sense changes to this gravity vector and infer different types of motions produced by the wheelchair including tilting and tipping. Tilting occurs as a voluntary action of the player in which they may balance themselves on two of the wheels (one main and one front caster) in order to reach higher or avoid an obstacle. We define tipping as the wheelchair tipping over to a position where it is no longer upright and must be righted. The gravity vector allows us to infer the orientation of each wheel in relation to the ground. Changes in the gravity vector of a certain magnitude can indicate the magnitude and direction of a tilt, as well as help to identify a situation where the wheelchair has likely tipped over. Sensing these tilts vs tipping can be used either as a tool to improve a person’s ability to perform tilts or to recognize when tipping has occurred during various activities or in a competitive situation.
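One way to turn a per-wheel gravity vector into tilt and tip events is to measure how far the wheel's axle leans away from its resting angle. The sketch below is illustrative only: it assumes the sensor's z axis is aligned with the axle (plausible for a hub-mounted sensor but not stated in the text), that a resting baseline angle (for example, the wheel camber) has been recorded, and that the two thresholds, which are hypothetical values chosen for the example, would be tuned on real data.

```python
import math


def lean_angle_deg(gravity, axle_axis: int = 2) -> float:
    """Angle in degrees between the axle and the horizontal plane.

    gravity: (x, y, z) gravity vector in the sensor frame.
    axle_axis: index of the sensor axis assumed to lie along the axle.
    """
    norm = math.sqrt(sum(g * g for g in gravity))
    if norm == 0:
        raise ValueError("gravity vector must be non-zero")
    ratio = max(-1.0, min(1.0, gravity[axle_axis] / norm))
    return math.degrees(math.asin(ratio))


def classify_orientation(gravity, baseline_deg: float,
                         tilt_threshold_deg: float = 10.0,
                         tip_threshold_deg: float = 45.0) -> str:
    """Label a gravity sample as 'upright', 'tilt', or 'tipped'.

    Both thresholds are hypothetical defaults, not values from the study.
    """
    deviation = abs(lean_angle_deg(gravity) - baseline_deg)
    if deviation >= tip_threshold_deg:
        return "tipped"
    if deviation >= tilt_threshold_deg:
        return "tilt"
    return "upright"
```

Because the component of gravity along the axle does not change as the wheel spins, this measure is unaffected by ordinary rolling, which is what makes non-rolling motion easy to separate out. The sign of the deviation (dropped here by `abs`) could additionally indicate the direction of a tilt.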

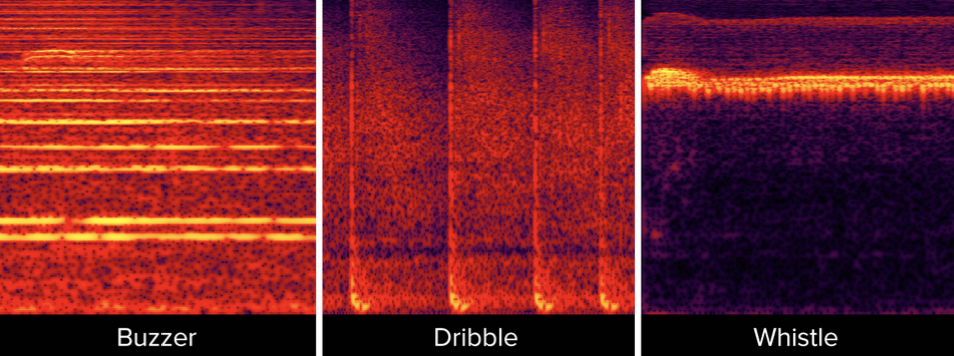

Contextual Clues. We incorporated a microphone into our sensor design in order to enable capturing of contextual factors for playing the game. We were initially interested in being able to recognize three important and recognizable sounds of the game: dribbling, the referee’s whistle, and the game buzzer. The sound of a dribbling basketball can be used as an indicator of "action" in a basketball game. Although we demonstrate that it is possible to recognize that a basketball is directly being dribbled by a player (at a certain level of accuracy), in wheelchair basketball, it is equally valuable to understand a player’s proximity to the "action" simply based on the sound of a dribbling ball, which makes this metric even more relevant.

The referee's whistle is also a key indicator of in-game rule enforcement and mechanics (fouls, out of bounds, stop/start the clock, etc.) during the course of a basketball game. While well-trained referees may use similar patterns (e.g. short whistle bursts indicate urgency and for everyone to stop and pay attention), the basic meaning associated with the whistle can be conceptualized to indicate that a) something has happened, b) pay attention to the referee who blew the whistle, and c) wait for the next whistle before resuming the game. Recognizing the whistle could enable the system to intelligently mark or filter events of interest during a game.

Finally, the sound of the buzzer can usually be heard in two or three variations which we identify as burst, short, and long buzzer. The buzzer is sounded typically to indicate the expiration of a time period; usually the shot clock or a period of play. The buzzer is also traditionally used to indicate when waiting substitutions can trade places with active players. The buzzer sound may be used to mark the boundaries of periods of activity.

2.4 System Performance

2.4.1 Motion Accuracy

Using a gyroscope to measure wheelchair activity has been shown to be reasonably accurate for distance and speed (<5% error) [17]. We conducted a brief study to verify the accuracy of our system with regard to speed, distance, and acceleration. We recruited 21 participants for the accuracy tests: seven non-player university students without disabilities who had shown an interest in wheelchair basketball and/or had participated in recreational wheelchair basketball games, one wheelchair basketball player from a local NWBA team who participated during a weekly practice, and thirteen players from the 2018 National Wheelchair Basketball Tournament (NWBT).

The accuracy tests involved propulsion tasks where participants either used their own wheelchair or a Per4Max Thunder basketball wheelchair, with a standard 15 degree wheel camber and 27-inch Spinergy wheels. The tasks involved propelling straight forward for a distance of between 8 and 12.5 meters, depending on the test setting (Table 1). The results from these propulsion tests are summarized in Table 2.

Table 1. Propulsion test settings.

| Test | Propulsion Task | Participants |
| --- | --- | --- |
| a | Propelling straight forward (8 m) on a hardwood surface using our basketball wheelchair | 7 Non-players |
| b | Propelling straight forward (12.5 m) on a basketball court using their own wheelchair | 1 Player |
| c | Propelling straight forward (~9 m) on a concrete surface using either wheelchair | 13 Players |

Table 2. Results of the propulsion tests.

| Test | Absolute Error (%) | Mean Percentage Error (std. dev.) | Measurement Error (%) |
| --- | --- | --- | --- |
| Lab Test (a) | 0.57 | 0.01 (5.42) | 2.05 |
| Field Test (b & c) | 13.41 | 13.35 (11.88) | 3.17 |
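The text does not spell out how the error columns are computed. As one plausible reading of the "Mean Percentage Error (std. dev.)" column only, the sketch below takes the signed percentage error of each trial against a reference value and reports its mean and sample standard deviation. The function name and this definition are assumptions, and the sketch does not attempt to reproduce the other columns.

```python
import statistics


def percentage_error_summary(measured, reference):
    """Mean and sample standard deviation of signed percentage errors.

    measured, reference: equal-length sequences (e.g., distances in metres);
    each reference value must be non-zero. Needs at least two trials.
    """
    if len(measured) != len(reference):
        raise ValueError("measured and reference must have the same length")
    errors = [100.0 * (m - r) / r for m, r in zip(measured, reference)]
    return statistics.mean(errors), statistics.stdev(errors)
```

A signed mean near zero combined with a large standard deviation, as in the lab test row, would indicate that over- and under-estimates roughly cancel across trials rather than that each trial is accurate.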

2.4.2 Audio Accuracy

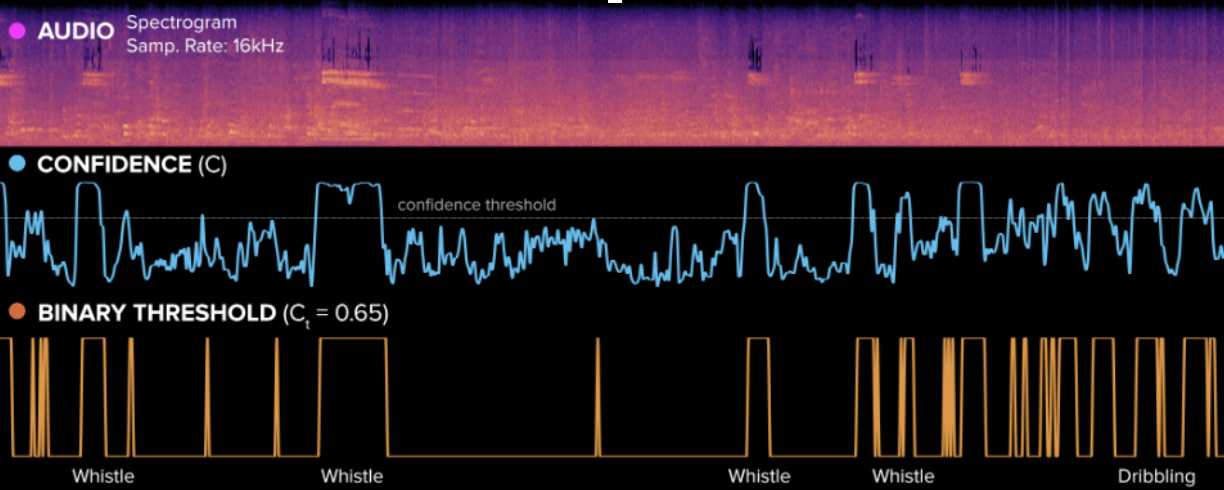

To automatically detect in-game events, we built a detection system that leverages our sensor’s microphone. We created a variant of Laput et al.'s Ubicoustics system [9], tuning it specifically for our events of interest (i.e., whistle, dribble, and buzzer) using multiple data sources. First, our dataset consists of audio events we collected during the National Wheelchair Basketball Tournament, manually segmented and annotated by our research team. Second, we employed Laput et al.'s method of leveraging sound effect libraries (plus data augmentations) to create an even bigger corpus without additional in-situ collection. Figure 3 shows an example visualization of various in-game events and their computed acoustic features. Figure 4 illustrates the auto-segmentation process. In our experiments, we found that a threshold value of 65% was sufficient to detect 90%+ of events while still minimizing false triggers.
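To make the role of the threshold concrete, the following sketch shows one way per-window classifier scores could be turned into segmented events. It assumes, without confirmation from the text, that the 65% threshold applies to the classifier's confidence for each analysis window and that consecutive windows with the same label are merged into one event. The data layout, the `"other"` label, and the function name are hypothetical; the Ubicoustics model itself is not reproduced here.

```python
def detect_events(frames, threshold: float = 0.65):
    """Merge consecutive confident windows into (start_s, end_s, label) events.

    frames: list of (time_s, {label: confidence}) in time order, where the
    confidences come from some upstream audio classifier.
    """
    events = []
    current = None  # [start_s, end_s, label] of the event being built
    for time_s, scores in frames:
        label, confidence = max(scores.items(), key=lambda kv: kv[1])
        # Windows below the threshold, or classified as background, are ignored.
        if confidence < threshold or label == "other":
            label = None
        if current is not None and label == current[2]:
            current[1] = time_s  # extend the running event
        else:
            if current is not None:
                events.append(tuple(current))
            current = [time_s, time_s, label] if label is not None else None
    if current is not None:
        events.append(tuple(current))
    return events
```

Raising the threshold trades missed events for fewer false triggers, which is the balance the 65% value is described as striking.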

Figures 3 and 4

Overall, our machine learning model, trained on prior games and tested on audio events from the Division I championship game, achieved an average clip-level accuracy of 98.9%. This result is promising, suggesting that audio could be a high-fidelity signal source for automatically detecting contextual events in a wheelchair basketball game. To further push the limits of our machine learning model, we injected foreign sounds into our testing dataset to assess its robustness. Specifically, we added non-target events mined from an external corpus (e.g., "people chattering", "baby crying", and "phone ringing", among others) to the testing set and monitored how SpokeSense classified them. Ideally, they would be tagged as "other sounds" (i.e., negative events) and not as one of our target events. Overall, the system achieved a similar accuracy of 98.1%, indicating that in-game sounds are distinctive enough even when the model is exposed to adversarial audio events. Future work remains, but these results offer a promising foundation for leveraging in-game sounds as an additional contextual channel for data-driven wheelchair basketball.

Open Challenges

In this article, we present SpokeSense, a wheelchair-based sensing solution designed to track and visualize the activities of wheelchair basketball. SpokeSense uses two wheel-based sensors, spokes, to record the motions of the wheelchair and identify other basketball-related events in the environment. We evaluated the accuracy of our sensors and demonstrated SpokeSense’s ability to provide relevant, accurate, and timely feedback to wheelchair basketball players and coaches. However, based on our studies using SpokeSense we introduce these five open challenges:

- How to support data sharing. Much of the opportunity around data sharing stems from the sense of connection present in this community. While individual preference is still prioritized, players and teams overall are interested in sharing their data in support of the broader community. Real-time data capture can better support comparisons between players with similar abilities and casual comparisons with elite athletes. Our prior work has focused on wheelchair basketball; however, the implications are similar for other wheelchair and adaptive sports [5]. Technology has the potential to facilitate connectedness between communities that are typically very physically and geographically constrained.

- How to optimize a sensing platform robust enough to capture the general and individual complexities of different wheelchair sports. In general, the movements of the wheelchair are relatively straightforward given that a person operates the chair mechanically in virtually the same way. However, different sports may require motions that are more or less mechanical in nature and rely on the body in different ways. In addition, the physical stress placed on the device in different environments can be drastically different. Consider an individual race where the material limits of the chair are tested vs. a competition like wheelchair rugby where the device may need to endure direct physical contact. This presents challenges across domains including signal processing as well as material choice and optimization.

- How to ensure the values and identities of the athletes, players, coaches, and spectators are not sacrificed in the pursuit of a generalized solution. Physical activity monitors that are designed for the general public can sometimes present an overly normative view of what is healthy or desirable, whether this is intentional or not. In traditional elite sports, the idea of conforming to a certain idealized view is often accepted as the norm. However, there is a growing body of work, including in the assistive technology space, that suggests instead leveraging the possibilities of personalization to support individuality.

- How to integrate with existing technical and non-technical solutions in use by athletes and teams. Discussions with coaches and players alike revealed that there is often enough time between competitions to facilitate analysis and review of past performances. However, this includes an implicit tradeoff between time spent on analysis vs time spent taking action and making adjustments for the future.

- How to navigate clearance for device use in games. It is one thing to test our prototype for internal and research purposes. When imagining the use of devices such as SpokeSense in routine practice and games there are other complexities to navigate including social factors and fairness.

Conclusion

We describe SpokeSense, an easy-to-install sensing solution intended for wheelchair basketball players. With SpokeSense, we provide support for both long-term and real-time analysis of performance information for wheelchair court sport athletes. Our overall goal was to leverage our sensor to support practical use during wheelchair basketball activities, primarily by providing access to relevant data while supporting both real-time and offline review. In this instance, leveraging the wheelchair creates more opportunities to utilize the features offered by the technology. We discussed the potential of SpokeSense to transform and enhance performance awareness for wheelchair athletes, to broadly impact activities surrounding wheelchair sports, and to move wheelchair sports closer to the analytics-driven future of competitive sports.

Acknowledgments

We thank all the participants, Per4Max Medical, the Pittsburgh Steelwheelers, and the National Wheelchair Basketball Association (NWBA) for their support of our work. Aspects of this work were also developed under a grant from the National Institute on Disability, Independent Living, and Rehabilitation Research (NIDILRR grant number 90DP0061).

References

- Brendan Burkett. 2010. Technology in Paralympic sport: performance enhancement or essential for performance? British journal of sports medicine 44, 3 (2010), 215–220.

- Nicola Cahill, Kevin Lamb, Paul Worsfold, Roy Headey, and Stafford Murray. 2013. The movement characteristics of English Premiership rugby union players. Journal of Sports Sciences 31, 3 (2013), 229–237.

- Patrick Carrington, Kevin Chang, Helena Mentis, and Amy Hurst. 2015. "But, I Don’t Take Steps": Examining the Inaccessibility of Fitness Trackers for Wheelchair Athletes. In Proceedings of the 17th International ACM SIGACCESS Conference on Computers & Accessibility (ASSETS'15). ACM, New York, NY, USA, 193–201. https://doi.org/10.1145/2700648.2809845

- Patrick Carrington, Amy Hurst, and Shaun K Kane. 2014. Wearables and chairables: inclusive design of mobile input and output techniques for power wheelchair users. In Proceedings of the 32nd annual ACM conference on Human factors in computing systems. ACM, 3103–3112.

- Patrick Carrington, Gierad Laput, and Jeffrey P Bigham. 2018 (to appear). Exploring the Data Tracking and Sharing Preferences of Wheelchair Athletes. In Proceedings of the 20th International ACM SIGACCESS Conference on Computers and Accessibility (ASSETS'18).

- Elaine H Coulter, Philippa M Dall, Lynn Rochester, Jon P Hasler, and Malcolm H Granat. 2011. Development and validation of a physical activity monitor for use on a wheelchair. Spinal Cord 49, 3 (2011), 445.

- Shivayogi V Hiremath, Dan Ding, and Rory A Cooper. 2013. Development and evaluation of a gyroscope-based wheel rotation monitor for manual wheelchair users. The journal of spinal cord medicine 36, 4 (2013), 347–356.

- Justin WL Keogh. 2011. Paralympic sport: an emerging area for research and consultancy in sports biomechanics. Sports Biomechanics 10, 3 (2011), 234–253.

- Gierad Laput, Karan Ahuja, Mayank Goel, and Chris Harrison. 2018. Ubicoustics: Plug-and-Play Acoustic Activity Recognition. In Proceedings of the 31st Annual ACM Symposium on User Interface Software Technology (UIST'18). ACM, New York, NY, USA.

- Barry Mason, John Lenton, James Rhodes, Rory Cooper, and Victoria Goosey-Tolfrey. 2014. Comparing the activity profiles of wheelchair rugby using a miniaturised data logger and radio-frequency tracking system. BioMed Research International 2014 (2014).

- Dafne Zuleima Morgado Ramirez and Catherine Holloway. 2017. "But, I Don’t Want/Need a Power Wheelchair": Toward Accessible Power Assistance for Manual Wheelchairs. In Proceedings of the 19th International ACM SIGACCESS Conference on Computers and Accessibility (ASSETS'17). ACM, New York, NY, USA, 120–129. https://doi.org/10.1145/3132525.3132529

- Manoela Ojeda and Dan Ding. 2014. Temporal parameters estimation for wheelchair propulsion using wearable sensors. BioMed Research International 2014 (2014).

- Jonathan B Shepherd, Daniel A James, Hugo G Espinosa, David V Thiel, and David D Rowlands. 2018. A Literature Review Informing an Operational Guideline for Inertial Sensor Propulsion Measurement in Wheelchair Court Sports. Sports 6, 2 (2018), 34.

- Sharon Eve Sonenblum, Stephen Sprigle, Jayme Caspall, and Ricardo Lopez. 2012. Validation of an accelerometer-based method to measure the use of manual wheelchairs. Medical engineering & physics 34, 6 (2012), 781–786.

- Clara Cristina Usma-Alvarez, Julian Jang Ching Chua, Franz Konstantin Fuss, Aleksandar Subic, and Michael Burton. 2010. Advanced performance analysis of the Illinois agility test based on the tangential velocity and turning radius in wheelchair rugby athletes. Sports Technology 3, 3 (2010), 204–214.

- RMA Van Der Slikke, MAM Berger, DJJ Bregman, AH Lagerberg, and HEJ Veeger. 2015. Opportunities for measuring wheelchair kinematics in match settings; reliability of a three inertial sensor configuration. Journal of Biomechanics 48, 12 (2015), 3398–3405.

- RMA Van der Slikke, MAM Berger, DJJ Bregman, and HEJ Veeger. 2015. Wheel skid correction is a prerequisite to reliably measure wheelchair sports kinematics based on inertial sensors. Procedia Engineering 112 (2015), 207–212.