Teachable Object Recognizers for the Blind: Using First-Person Vision

Kyungjun Lee, Department of Computer Science, University of Maryland, College Park, kjlee@cs.umd.eduAbstract

Advances in artificial intelligence and computer vision hold great promises for increasing access to information and improving the quality of life for people with visual impairments. However, these recent technologies require an abundance of training data, which are typically obtained from sighted users. These training images tend to have different characteristics from images taken by blind users due to inherent differences in camera manipulation. My research focus is to build a teachable object recognizer, where blind users can actively train the underlying model with objects in proximity to their hand(s). Thus, the datasets used in my project are more representative of the blind population. In this article, I describe my research motivations and goals, and summarize my research plan.1. Motivation

Advances in artificial intelligence, such as deep learning, have shown promising performance in a wide range of applications in computer vision and natural language processing. The human-computer interaction and accessibility communities have been quick to adapt these advances for assistive technologies that support and benefit people with visual impairments [4, 5, 6, 12, 15].

Although deep learning models require abundant data for training, prior work on transfer learning [3, 13] shows that previously-learned knowledge in object categorization can be utilized to classify new object classes even with a few images of them. Kacorri et al. [4] demonstrate that teachable object recognizers built with deep learning models can enable blind users to personalize the object recognition with a few snapshots of objects of interest and their custom labels. Their study findings indicate that personal object recognizers trained by the users on a few object instances can be more robust than a generic state-of-the-art object recognizer trained on broader image classes. They also observed that blind participants tend to use one of their hands as a guiding reference point for the camera when taking photos. My research delves further into this remark, as one of the main challenges for blind users in training a personal object recognizer is to inspect their training examples and ensure that the target object is included.

Furthermore, an object recognizer should allow blind users to teach the recognizer as they use it; i.e., they should be able to personalize the recognizer. For the personalization, teachable interfaces involving a human “in the loop” are essential in designing such assistive deep-learning-based applications. Cheng et al. [1] show that combining human judgments and machine learning techniques enhances the performance of classifiers. Current human-in-the-loop approaches in machine learning, however, do not consider people with disabilities. For example, Google Clips, which is the wireless smart camera trained as being used, involves human interactions to learn about features of momentous photos to users. Despite its potential in accessibility for people with visual impairments, the device inherently hinders them to use it because of its vision-based interactions. Motivated by these facts, I plan to make teachable interfaces inclusive for blind people.

2. Research Goals

My research begins by asking the following question, Q1: how to ensure that an object of interest is in images when blind users are training their object recognizer? When the users are training their object recognizer, the training can be effectively done if the target object is salient in the images. For blind users, however, it is difficult to check if the object of interest is included in the training examples. My approach to this issue is to first find user's hands in images and then localize an object appearing in the vicinity of the hands. A similar approach was also adopted by Ma et al. [9], and supported in Kacorri et al. study [5]; specifically, they observed that blind participants tend to either place their hand in proximity to the object or hold the object when taking photos. Leveraging prior work on image classification and segmentation (e.g., [7, 8, 10, 14]), my work will investigate the effectiveness of these models for blind users through user studies with the intended population.

My second research question is Q2: how to improve teachable interfaces for blind users? To explore this question, I will find effective ways to adapt and extend existing human-in-the-loop approaches, which typically do not account for individuals with visual impairments, so as to enable these users to train assistive technologies that incorporate computer vision models.

To answer these research questions, I have tentatively planned my research works as follows:

- Build a deep learning model that recognizes an object of interest with respect to the pose and location of user's hand(s).

- Train the model based on existing datasets (e.g., GTEA [2]) that consist of first-person images including hands that are interacting with some objects.

- Design and conduct user studies with blind and sighted participants to evaluate the model.

- Design teachable interfaces for combining personal object recognizer with wearable cameras.

- Evaluate the teachable interfaces through user studies with people with visual impairments.

3. Current Progress and Future Work

I completed the qualifying course requirements for my Ph.D. program during my first two years. Since Spring 2018, I have been working on my first research question, Q1. In particular, I have i) familiarized myself with prior work on machine learning techniques for accessibility and deep learning models in computer vision applications, ii) searched existing datasets that contain first-person images or videos, iii) developed an initial deep learning model that segments hand(s) from images, and iv) fine-tuned the hand segmentation model to estimate the location of an object of interest with respect to the pose and location of the user's hand(s).

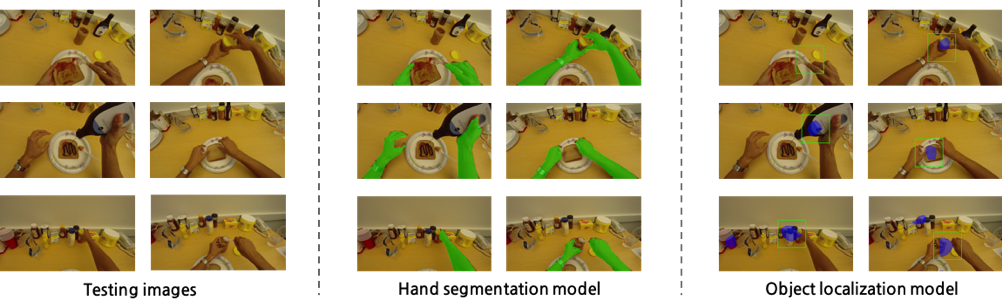

In detail, I developed a deep learning model that leverages the fact that objects of interest appear in the vicinity of user's hand(s) using similar methods to the ones proposed in [9]. For training and testing the model, I used the first-person images from the GTEA dataset [2]. First, I trained the model to segment hand(s) from the images so that the model would learn the distribution biased on the hand(s). To estimate possible center locations of an object according to the location and pose of the hand(s), I employed the transfer learning method [11] to fine-tune the hand segmentation model. Figure 1 shows the outputs of the hand segmentation model and the object localization model given the testing images. As shown, our localization model well estimates the location of an object of interest conditioned on hand(s) on the test images from the same GTEA dataset.

Based on the localization output, I crop the images that contain only the object of interest, and use them to train a recognition model. Next, I will assess its performance and generalizability through user studies with blind participants. This past summer, I worked with a blind research member in our lab to evaluate the recognition model in controlled environments and collect egocentric images/videos that can serve as a testbed. Our TEgO dataset and preliminary results of our analysis indicate that this approach can help with cluttered environments [16]. In the upcoming academic year, I will focus on the aforementioned user studies with a larger user pool and the exploration of wearable teachable interfaces for our object recognizer.

4. Contributions

Advances in deep learning can benefit people with visual impairments by increasing the accuracy of assistive technologies using computer vision. The main contribution of my work will be the design of a teachable object recognizer for blind users that understands users' activities in the first-person view and recognizes the object that the users are interacting with. By evaluating this approach, I believe that we can gain better insight into the design and benefits of the teachable interfaces, and assess the compatibility of the existing datasets from sighted people and new datasets from blind people. I expect that egocentric data collected during our model evaluation would serve as new resources for future researchers working on similar accessibility challenges.Acknowledgments

I would like to thank the 2018 ASSETS Doctoral Consortium Committee for their valuable feedback and my Ph.D. advisor, Dr. Hernisa Kacorri, for her mentoring and support. This work is supported by NIDILRR (#90REGE0008).References

- Cheng, J. and Bernstein, M.S., 2015, February. Flock: Hybrid crowd-machine learning classifiers. In Proceedings of the 18th ACM conference on computer supported cooperative work & social computing (pp. 600-611). ACM.

- Fathi, A., Ren, X. and Rehg, J.M., 2011, June. Learning to recognize objects in egocentric activities. In Computer Vision and Pattern Recognition (CVPR), 2011 IEEE Conference On(pp. 3281-3288). IEEE.

- Fei-Fei, L., Fergus, R. and Perona, P., 2006. One-shot learning of object categories. IEEE transactions on pattern analysis and machine intelligence, 28(4), pp.594-611.

- Kacorri, H., 2017. Teachable machines for accessibility. ACM SIGACCESS Accessibility and Computing, (119), pp.10-18.

- Kacorri, H., Kitani, K.M., Bigham, J.P. and Asakawa, C., 2017, May. People with visual impairment training personal object recognizers: Feasibility and challenges. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems (pp. 5839-5849). ACM.

- Kacorri, H., Ohn-Bar, E., Kitani, K.M. and Asakawa, C., 2018, April. Environmental Factors in Indoor Navigation Based on Real-World Trajectories of Blind Users. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (p. 56). ACM.

- Krizhevsky, A., Sutskever, I. and Hinton, G.E., 2012. Imagenet classification with deep convolutional neural networks. In Advances in neural information processing systems (pp. 1097-1105).

- LeCun, Y., Bengio, Y. and Hinton, G., 2015. Deep learning. nature, 521(7553), p.436.

- Ma, M., Fan, H. and Kitani, K.M., 2016. Going deeper into first-person activity recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 1894-1903).

- Nowlan, S.J. and Platt, J.C., 1995. A convolutional neural network hand tracker. Advances in neural information processing systems, pp.901-908.

- Oquab, M., Bottou, L., Laptev, I. and Sivic, J., 2014. Learning and transferring mid-level image representations using convolutional neural networks. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 1717-1724).

- Poggi, M. and Mattoccia, S., 2016, June. A wearable mobility aid for the visually impaired based on embedded 3d vision and deep learning. In Computers and Communication (ISCC), 2016 IEEE Symposium on (pp. 208-213). IEEE.

- Romera-Paredes, B. and Torr, P., 2015, June. An embarrassingly simple approach to zero-shot learning. In International Conference on Machine Learning (pp. 2152-2161).

- Szegedy, C., Liu, W., Jia, Y., Sermanet, P., Reed, S., Anguelov, D., Erhan, D., Vanhoucke, V. and Rabinovich, A., 2015. Going deeper with convolutions. In Proceedings of the IEEE conference on computer vision and pattern recognition(pp. 1-9).

- Zhao, Y., Wu, S., Reynolds, L. and Azenkot, S., 2018, April. A Face Recognition Application for People with Visual Impairments: Understanding Use Beyond the Lab. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (p. 215). ACM.

- Lee, K. and Kacorri, H., 2019, May. Hands Holding Clues for Object Recognition in Teachable Machines. To appear in Proceedings of the 2019 CHI conference on Human Factors in Computing Systems. ACM.