Autism and the Web: Using Web-searching Tasks to Detect Autism and Improve Web Accessibility

Victoria Yaneva, University of Wolverhampton, UK, v.yaneva@wlv.ac.uk

Le An Ha, University of Wolverhampton, UK, l.a.ha@wlv.ac.uk

Sukru Eraslan, Middle East Technical University, Northern Cyprus, seraslan@metu.edu.tr

Yeliz Yesilada, Middle East Technical University, Northern Cyprus, yyeliz@metu.edu.tr

Abstract

People with autism consistently exhibit different attention-shifting patterns compared to neurotypical people. Research has shown that these differences can be successfully captured using eye tracking. In this paper, we summarise our recent research on using gaze data from web-related tasks to address two problems: improving web accessibility for people with autism and detecting autism automatically. We first examine the way a group of participants with autism and a control group process the visual information from web pages and provide empirical evidence of different visual searching strategies. We then use these differences in visual attention to train a machine-learning classifier that uses the gaze data to distinguish between the two groups with an accuracy of 0.75. Finally, we review the way forward for improving web accessibility and automatic autism detection, as well as the practical implications of, and alternatives to, using eye tracking in these research areas.

1. Introduction

In 2018, 75 years after the first mention of autism by Leo Kanner, our ability to recognise autism is still strikingly limited. In high-income countries, it is a common clinical experience for people to receive a late diagnosis, a wrong diagnosis or no diagnosis at all, and in low-income countries this problem is further exacerbated by the lack of trained professionals and limited access to diagnostic tools. At the same time, accessibility solutions that support people with autism in their use of the web are severely under-studied. Among the many web accessibility guidelines for people with autism that we reviewed, there was virtually no direct empirical evidence for the proposed requirements and needs, and no user evaluation of any of the proposed solutions.

In our work we approach these two problems as one. To do that, we use eye tracking to record visual attention shifting whilst participants looked for information on web pages. We use the gaze data to analyse the differences in the way people with autism process the web pages compared to control participants and, in turn, we show that the differences in visual attention can be used to develop screening tools for autism.

In this paper, we first discuss visual attention in autism and how it can be captured through eye tracking (Section 2). We then briefly describe our data collection (Section 3), and how we use the gaze data to improve web accessibility (Section 4) and detect autism (Section 5). We conclude with a discussion of the future challenges and opportunities in addressing these two issues (Section 6).

2. Visual Attention in Autism

Autism is a neurodevelopmental disorder characterised by impairment in communication and social interaction. While people with autism differ widely from one another in the severity of their symptoms and the types of challenges they may encounter, there are certain common patterns that apply to most (although not all) people with autism. One of these commonalities is that attention shifting may work differently from the mechanisms generally recognised among neurotypical people (Frith, 2003).

Certain "bottom-up" approaches to the processing of visual information amongst people with autism include a focus on small details, often to the exclusion of "the bigger picture", explained as a bias towards "processing local sensory information with less account for global, contextual and semantic information" (Happe and Frith, 2006). Other researchers report reliance on only one sensory modality (e.g. shape or colour) when several are relevant to a task (Lovaas and Schreibman, 1971). This phenomenon is known as "stimulus overselectivity" (Lovaas and Schreibman, 1971) or "tunnel vision" (Ploog, 2010). These differences in attention are known to affect the way some people with autism process images in text documents: they focus on the images significantly longer than control-group participants do (Yaneva, Temnikova and Mitkov, 2015). Another study reports effects of the lexical properties of words on viewing time per word in autistic and neurotypical readers (Stajner et al., 2017).

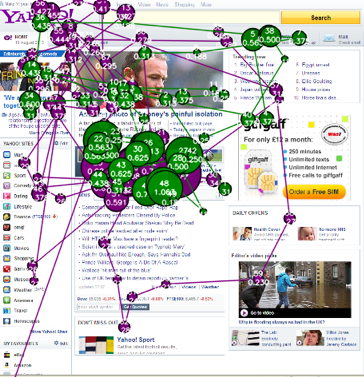

In our work, we investigated how the environment of web pages, with their many images, text paragraphs and creative formatting, influenced visual attention in people with autism and whether they processed the information within the pages differently compared to a control group. To investigate this, we collected eye-tracking data from web-related tasks. Eye tracking is a process in which an eye-tracking device measures where an eye is looking (the point of gaze) or how it moves, relative to the head and a computer screen (Duchowski, 2009). Periods in which the gaze rests relatively still on one location are called fixations, and the rapid eye movements between them are called saccades. Examples of fixations and saccades forming a scan path can be seen in Figure 1. Gaze fixations and revisits (go-back fixations to a previously fixated object) have been widely used as measures of cognitive effort by taking into account their durations and the places where they occur (Duchowski, 2009).
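Eye trackers normally ship with their own fixation filter, and the text does not say which one was used in our studies. As a rough illustration of how raw gaze samples become the fixations and saccades described above, the sketch below implements velocity-threshold identification (I-VT), one common textbook approach. The 0.5 px/ms threshold, the 60 ms minimum duration and the names `Fixation` and `ivt_fixations` are hypothetical choices for illustration, not parameters from the study.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Fixation:
    x: float            # centroid x (px)
    y: float            # centroid y (px)
    start_ms: float     # timestamp of the first sample in the fixation
    duration_ms: float  # time from the first to the last sample


def ivt_fixations(samples, velocity_threshold=0.5, min_duration_ms=60):
    """Velocity-threshold (I-VT) fixation identification.

    samples: list of (t_ms, x_px, y_px) tuples sorted by time.
    velocity_threshold: hypothetical cut-off in px/ms; faster movement
        between two samples is treated as part of a saccade.
    min_duration_ms: hypothetical minimum duration for a fixation.
    """
    fixations = []
    group = []  # run of consecutive low-velocity samples

    def flush():
        # Turn the current run into a fixation if it lasted long enough.
        if len(group) >= 2 and group[-1][0] - group[0][0] >= min_duration_ms:
            cx = sum(s[1] for s in group) / len(group)
            cy = sum(s[2] for s in group) / len(group)
            fixations.append(
                Fixation(cx, cy, group[0][0], group[-1][0] - group[0][0]))
        group.clear()

    for prev, cur in zip(samples, samples[1:]):
        dt = cur[0] - prev[0]
        speed = hypot(cur[1] - prev[1], cur[2] - prev[2]) / dt if dt > 0 else 0.0
        if speed < velocity_threshold:
            if not group:
                group.append(prev)
            group.append(cur)
        else:
            flush()  # a saccade ends the current fixation
    flush()
    return fixations
```

For example, 100 ms of samples at one location followed by a jump and 190 ms at another location yields two fixations; the jump itself is treated as a saccade.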

3. Data Collection

We recorded eye-tracking data from 18 adult participants with high-functioning autism and 18 control participants of similar age and level of education. While looking at six web pages of varying visual complexity, the participants were asked to complete two tasks. In the first task, called Browsing, no specific purpose was defined and the participants were free to look at and read whatever they found interesting on each page for up to two minutes. The second task, called Searching, required the participants to answer two questions per web page and locate the correct answer on the screen within 30 seconds. All control-group participants were screened for autistic traits using the Autism-Spectrum Quotient (AQ) test (Baron-Cohen et al., 2001) to make sure that none of them was on the spectrum without having received a formal diagnosis.

We then organised the elements of each web page into Areas of Interest (AOIs), as shown in Figure 2, and extracted the gaze data for each AOI.
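Assigning fixations to AOIs and aggregating per-AOI measures could look roughly like the following sketch. It assumes rectangular AOIs given in page pixels (real AOIs can have other shapes) and takes the first matching rectangle if AOIs overlap; the AOI labels, coordinates and the names `aoi_at` and `aoi_features` are hypothetical. The three outputs correspond to gaze-based measures used in this paper: number of fixations, time viewed and revisits.

```python
from collections import defaultdict


def aoi_at(x, y, aois):
    """Return the label of the first AOI rectangle containing (x, y), else None.

    aois: dict label -> (left, top, width, height) in page pixels.
    """
    for label, (left, top, width, height) in aois.items():
        if left <= x < left + width and top <= y < top + height:
            return label
    return None


def aoi_features(fixations, aois):
    """Per-AOI fixation count, total time viewed and number of revisits.

    fixations: list of (x, y, duration_ms) in temporal order.
    A revisit is counted when gaze returns to an AOI it had left earlier.
    """
    feats = defaultdict(lambda: {"fixations": 0, "time_viewed_ms": 0, "revisits": 0})
    seen = set()
    previous = None
    for x, y, duration in fixations:
        label = aoi_at(x, y, aois)
        if label is None:
            previous = None  # gaze left all AOIs; returning later is a revisit
            continue
        f = feats[label]
        f["fixations"] += 1
        f["time_viewed_ms"] += duration
        if label != previous and label in seen:
            f["revisits"] += 1
        seen.add(label)
        previous = label
    return dict(feats)
```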

Full details about the data collection and data extraction can be found in Yaneva et al. (2018).

4. Using Gaze to Improve Web Accessibility

This section presents our work towards improving web accessibility for people with autism. The full technical details of this part of the analysis can be found in Eraslan et al. (2017).

4.1 Existing Web Accessibility Guidelines for Web Users with Autism

As mentioned in the Introduction, there is almost no empirical evidence of what the web accessibility requirements of people with autism are. Britto and Pizzolato (2016) collected 22 sets of autism-related web accessibility guidelines. Our review of these guidelines showed that none of them is based on empirical research with people with autism. Instead, they are derived from sets of recommendations developed for people with low literacy (e.g. Darejeh and Singh, 2013) or with cognitive disabilities in general (e.g. Friedman and Bryen, 2007). Even the most authoritative resource, the Cognitive Accessibility User Research document issued by the W3C Cognitive and Learning Disabilities Accessibility Task Force (Seeman and Cooper, 2015), relies on the ASD diagnostic criteria as its source of information about potential accessibility barriers, together with a single interview with an anonymous user. In summary, current web accessibility guidelines for people with autism are characterised by two main drawbacks:

- They are usually derived by matching the diagnostic criteria to potential web accessibility barriers for people with autism, or through guidelines for people with low literacy or cognitive disabilities in general.

- The proposed accessibility solutions have never been evaluated.

4.2 Gaze as Evidence for Information Processing Differences

To address these issues, we analysed the gaze data from the web-searching tasks, whose collection was outlined in Section 3. This analysis revealed several important trends.

First, the participants with autism looked at more irrelevant elements than the control-group participants did. This difference was significant for two of the six web pages. We define irrelevant elements as those whose purpose is peripheral to the main purpose of the web page and which are also not relevant to the search tasks (e.g. elements K to R in Figure 2). The control-group participants seemed better able to dismiss certain visual elements as irrelevant, or to follow organisational cues and grasp the principle by which a web page is structured.

Second, the scan paths of the participants with autism were longer and they made more transitions between the elements of the web pages. Surprisingly, their fixations were shorter than those of the control group, suggesting that they were scanning the elements at a higher pace. According to the eye-tracking literature (Ehmke and Wilson, 2007), a longer scan path on a web page is related to less efficient searching, and a higher number of transitions between separate elements is associated with uncertainty. Compared to the ASD group, the control-group participants tended to exclude certain elements as irrelevant and to spend longer processing the relevant visual elements, which allowed them to grasp their meaning and complete the task in time. The participants with autism, on the other hand, paid less attention to the meaning of the individual elements (relevant or irrelevant) and used their time to scan as many elements as possible. They would often continue searching even after they had already seen the correct element, because they did not recognise it as such.
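The three measures discussed above can be computed from an AOI-labelled fixation sequence as in this sketch. The definitions used here (scan-path length as the summed Euclidean distance between successive fixations, a transition as two consecutive fixations in different AOIs) are common conventions assumed for illustration; the exact definitions used by Eraslan et al. (2017) may differ, and the name `scanpath_metrics` is hypothetical.

```python
from math import hypot


def scanpath_metrics(fixations):
    """Scan-path length, AOI transitions and mean fixation duration.

    fixations: list of (x, y, duration_ms, aoi_label) in temporal order;
    aoi_label may be None for fixations outside every AOI.
    """
    if not fixations:
        return {"scanpath_length_px": 0.0, "aoi_transitions": 0, "mean_fixation_ms": 0.0}
    pairs = list(zip(fixations, fixations[1:]))
    # Scan-path length: summed Euclidean distance between successive fixations.
    length = sum(hypot(b[0] - a[0], b[1] - a[1]) for a, b in pairs)
    # Transition: two consecutive fixations that land in different AOIs.
    transitions = sum(1 for a, b in pairs if a[3] != b[3])
    mean_duration = sum(f[2] for f in fixations) / len(fixations)
    return {"scanpath_length_px": length, "aoi_transitions": transitions,
            "mean_fixation_ms": mean_duration}
```

Under these definitions, a participant who scans many elements quickly would show a longer scan path, more transitions and a lower mean fixation duration, which is the pattern reported for the ASD group.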

4.3 Improving the Web Accessibility Guidelines for People with Autism

These results are, to the best of our knowledge, the first empirical evidence for differences in the processing of web pages between people with and without autism. Based on our results, our main recommendation is to focus on reducing time and effort rather than on providing different means of obtaining the information (as many of the reviewed guidelines propose). Our findings suggest that web users with autism need more time and more cognitive effort than neurotypical users to process web pages. Providing different means of obtaining the information, such as additional media (e.g. videos or sound), may therefore be distracting, and future accessibility initiatives for users with high-functioning autism should focus on reducing cognitive load by removing unnecessary page elements. Because people with autism tend to look at many elements and to examine web pages incrementally, having fewer elements would reduce both the cognitive load and the time they need to process a page.

5. Detecting Autism Using Gaze Data

This section presents our work on automatic detection of autism using gaze data from web-related tasks. The full details of this study can be found in Yaneva et al. (2018).

5.1 Current Diagnostic Practices for Autism

There is currently no known biomarker for autism. As a result, the ASD diagnosis is based on behavioural, historical and parent-report information and is ultimately dependent on subjective clinical judgement (Bernas, Aldenkamp, and Zinger, 2018; Falkmer et al., 2013). Davidovitch et al. (2015) report on a cohort of 221 children who had undergone a total of 1,028 evaluations before the age of six, which concluded that these children were not on the autism spectrum. Subsequent assessments after the age of six, however, refuted these initial conclusions, and the children were eventually diagnosed with autism. The authors attribute these contradictory opinions to the complexity of the condition and the way its symptoms are exhibited, as well as to "inadequate screening practices, inappropriate or delayed responses of physicians to parental concerns, low sensitivity of screening instruments for autism, and a general lack of awareness of autistic symptoms" (Davidovitch et al., 2015). Their study illustrates the difficulty of diagnosing autism, even in cases where the children's behaviour has been brought to the attention of clinicians.

A possible solution to these problems would be to provide an accessible and unobtrusive screening method for autism which relies on behavioural data from everyday tasks.

5.2 Developing Automatic Screening for Autism

To develop such a screening method, we used the gaze data described in Section 3. Our main hypothesis is that the different attention-shifting mechanisms of the two groups are revealed through the gaze data from the web-related tasks, and thus attention differences can be used as a marker of the condition.

In our experiments we used logistic regression to classify the participants into one of the two groups: ASD or control. We used the gaze data from both the Browsing and the Searching tasks. We evaluated the model through 100-fold cross-validation, where in each fold the data from 10 randomly selected participants per group were used for training and the unseen data from the remaining 5 participants per group were used for testing. The models were trained using both gaze-based features, such as the number of fixations, the number of revisits or the time viewed, and non-gaze features, such as the ID of the AOI or the web page, whether or not the AOI contained the correct answer to a task, the visual complexity of each web page, and the participant's gender.
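The evaluation protocol above can be sketched as follows. This is a minimal, self-contained illustration under several assumptions that are not taken from the study: each row holds one participant's numeric feature vector for one AOI (categorical features such as AOI or page ID would first need to be one-hot encoded), the logistic regression is fitted by plain batch gradient descent with hypothetical hyperparameters rather than with whatever toolkit was actually used, the "100-fold cross-validation" is read as 100 rounds of random participant-level splitting, and accuracy is measured per row. All function names are hypothetical. The point the sketch illustrates is that all rows belonging to a participant stay on one side of each split, so the model is always tested on unseen people.

```python
import math
import random


def participant_split(asd_ids, control_ids, n_train=10, n_test=5, rng=random):
    """One round: disjoint train/test participant sets, balanced per group."""
    train, test = set(), set()
    for ids in (asd_ids, control_ids):
        chosen = rng.sample(sorted(ids), n_train + n_test)
        train.update(chosen[:n_train])
        test.update(chosen[n_train:])
    return train, test


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logreg(X, y, lr=0.1, epochs=300):
    """Unregularised logistic regression fitted by batch gradient descent."""
    w, b, n = [0.0] * len(X[0]), 0.0, len(X)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * len(w), 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j, xj in enumerate(xi):
                grad_w[j] += err * xj
            grad_b += err
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict(w, b, x):
    # Class 1 (ASD) when the linear score is positive, i.e. probability > 0.5.
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b > 0 else 0


def repeated_participant_cv(rows, asd_ids, control_ids, n_rounds=100, seed=0):
    """Mean test accuracy over n_rounds random participant-level splits.

    rows: list of (participant_id, feature_vector, label); label 1 = ASD.
    """
    rng = random.Random(seed)
    accuracies = []
    for _ in range(n_rounds):
        train_ids, test_ids = participant_split(asd_ids, control_ids, rng=rng)
        train = [r for r in rows if r[0] in train_ids]
        test = [r for r in rows if r[0] in test_ids]
        w, b = train_logreg([r[1] for r in train], [r[2] for r in train])
        correct = sum(predict(w, b, r[1]) == r[2] for r in test)
        accuracies.append(correct / len(test))
    return sum(accuracies) / len(accuracies)
```

Splitting by participant rather than by row matters because rows from the same person are strongly correlated; a row-level split would let the model see part of each test participant during training and inflate the accuracy.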

We also explored the effect of different ways of defining the Areas of Interest (AOIs) on classification performance, distinguishing between page-specific and generic AOIs. The page-specific AOIs correspond to the elements of each web page, as presented in Figure 2. The generic AOIs are defined by a simple 2 x 2 grid that divides the page into four equal parts.
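The generic grid AOIs require no knowledge of the page layout, only its dimensions. A minimal sketch, assuming fixation coordinates in page pixels; the function name `grid_cell` and the 1280 x 1024 page size used in the checks are hypothetical:

```python
def grid_cell(x, y, page_width, page_height, rows=2, cols=2):
    """Generic AOI: index of the grid cell containing (x, y), numbered
    row-major from 0 at the top-left, or None if the point is off the page."""
    if not (0 <= x < page_width and 0 <= y < page_height):
        return None
    # Clamp guards against floating-point rounding at the right/bottom edge.
    col = min(int(x * cols // page_width), cols - 1)
    row = min(int(y * rows // page_height), rows - 1)
    return row * cols + col
```

With `rows=2, cols=2` the four cells are numbered 0 (top-left), 1 (top-right), 2 (bottom-left) and 3 (bottom-right).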

5.3 Results from the Classification Experiments

The results from the classification experiments showed that, indeed, visual attention differences can be used to screen for autism. Best performance was achieved when using selected web pages. For the Searching task, the accuracy was 0.75 and for the Browsing task it was 0.71, with both classes predicted with a similar level of accuracy.
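Checking that both classes were predicted with a similar level of accuracy amounts to reporting per-class recall next to overall accuracy: sensitivity for the ASD class and specificity for the control class. The sketch below shows this computation; the function name is hypothetical and the labels in the checks are made up, not results from the study.

```python
def class_accuracies(y_true, y_pred, positive=1):
    """Overall accuracy, sensitivity (recall on the positive/ASD class) and
    specificity (recall on the negative/control class)."""
    pairs = list(zip(y_true, y_pred))
    overall = sum(t == p for t, p in pairs) / len(pairs)
    pos_preds = [p for t, p in pairs if t == positive]
    neg_preds = [p for t, p in pairs if t != positive]
    sensitivity = sum(p == positive for p in pos_preds) / len(pos_preds)
    specificity = sum(p != positive for p in neg_preds) / len(neg_preds)
    return overall, sensitivity, specificity
```

A classifier with a good overall accuracy but very unequal sensitivity and specificity would be a poor screening tool, which is why the balance between the two classes is worth reporting.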

While participant gender and the level of visual complexity of the web pages did not influence the results, both the type of task (Browsing or Searching) and the type of AOIs (page-specific or generic) had an effect on classification performance. For the task type, better performance was achieved using the data from the Searching task, possibly because answering specific questions elicited larger visual-attention differences and highlighted different problem-solving strategies between the two groups. Nevertheless, the data from the Browsing task also revealed differences, achieving a classification accuracy of up to 0.71. In terms of AOIs, the way the data was extracted did not matter for the Browsing task, where both configurations reached an accuracy of around 0.7. This was not the case for the Searching task, however, where the 2 x 2 grid performed significantly worse than the page-specific AOIs, reaching a top accuracy of only 0.56. A possible explanation is that in the Browsing task all areas were of equal importance, while in the Searching task certain areas were more likely to contain the correct answer than others.

To the best of our knowledge, this is the first study to use gaze data for detecting autism. The broader impact of the results relates to the ideas that: i) visual attention could potentially be used as a marker of autism, ii) web-page processing tasks are a good stimulus set, and iii) performance on such tasks could be used to develop an affordable and accessible serious game for the detection of autism on a large scale.

6. Ways Forward

The use of visual-attention differences for automatic autism detection and improving web accessibility has so far given promising results, and eye tracking holds significant potential in both these areas. In this section we discuss the advantages and disadvantages of using eye tracking, as well as the ways forward for improving automatic autism detection and the accessibility of the web.

6.1 Eye Tracking: Advantages, Disadvantages and Alternatives

Eye tracking provides valuable information and many data points, which is of great help when access to a large number of participants is not feasible. Capturing visual attention differences through gaze recordings is a language-independent approach and is thus highly scalable. It is also encouraging that eye tracking is becoming widely available in mainstream applications such as Eye Control for Windows 10. Once specific attention differences that give a strong discrimination signal have been identified, these differences could be captured through means that do not require eye-tracking equipment. Until we reach that point, however, conducting eye-tracking studies requires access to an eye tracker and a time-consuming data collection process, which may be a practical challenge. At the same time, other means of understanding the interactions between participants and web pages, such as logs of mouse clicks, may have low sensitivity and require a large number of participants, which is also not always possible.

Alternatives to eye tracking that could potentially be used in autism detection and web accessibility research include change blindness and exploring blurred images with a mouse pointer. In the first approach, visual attention is examined by introducing small changes to a scene and measuring the point at which these changes are noticed. The second approach is used to create heatmaps similar to the ones produced using eye trackers and is widely used in commercial research. It remains to be seen whether these approaches to examining visual attention can be successfully applied to autism detection, but for the moment they represent intriguing alternatives to traditional eye-tracking techniques.
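For the blurred-image approach, a heatmap can be built by binning pointer positions, weighted by how long the pointer rested there, into a coarse grid. This sketch assumes the logging tool records (x, y, dwell time) samples; the function name `dwell_heatmap` and the 50 px cell size are hypothetical, and production heatmaps usually also apply Gaussian smoothing, which is omitted here.

```python
def dwell_heatmap(points, width, height, cell=50):
    """Sum dwell time per cell of a coarse grid laid over a width x height image.

    points: iterable of (x, y, dwell_ms) pointer (or gaze) samples.
    Returns a list of rows, each a list of per-cell totals in ms.
    """
    cols = -(-width // cell)   # ceiling division, so edge pixels get a cell
    rows = -(-height // cell)
    grid = [[0.0] * cols for _ in range(rows)]
    for x, y, dwell in points:
        if 0 <= x < width and 0 <= y < height:  # ignore samples off the image
            grid[int(y // cell)][int(x // cell)] += dwell
    return grid
```

The same binning applied to gaze fixations would produce an eye-tracking heatmap, which is what makes the mouse-based variant a plausible low-cost substitute.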

6.2 Improving Autism Detection

With regard to autism detection, the two main challenges are: i) designing better tasks that would amplify the differences and ii) capturing these differences in a non-obtrusive, accessible way. So far in our studies, web pages have proven to be a suitable "playground" for designing good tasks, as they represent a familiar everyday environment (as opposed to laboratory stimuli), contain a large number of diverse inputs (e.g. text, image, sound, video), and confine all interactions with the visual information to the area of the screen. Potential ways of amplifying the differences include designing tasks related not only to visual attention but also to general information processing, analysis, synthesis and inferencing. In our current work, for example, we investigate inference tasks that require synthesising information from different parts of the screen in order to arrive at a third, implicit piece of information. The question of capturing the signal without the need for an eye tracker was partially discussed in the previous section, but it is worth noting that once we are able to identify specific discriminatory features, these may later be targeted and captured through the development of a serious game for autism screening.

6.3 Improving Web Accessibility for People with Autism

So far we have provided empirical evidence that people with autism process the elements of web pages differently, but the main task of identifying ways to better adapt the pages remains unaddressed (Perez et al., 2017). Having fewer elements would certainly help reduce the cognitive effort required to process a page; nevertheless, further research is needed to determine how exactly this can be achieved. Another vast area that requires experimental assessment is the evaluation of the various ways of improving web accessibility proposed in the different sets of guidelines. Last but not least, the needs of web users with autism with regard to the textual component of the web have not yet been explored.

So far we have only scratched the surface of this problem by conducting a survey on how web users with autism perceive the accessibility of online product and service reviews (Yaneva et al., under review). Our survey found that the participants with autism perceived significantly more barriers when reading online reviews, found the reviews significantly more difficult to comprehend, and had significantly greater difficulty inferring whether the author approves or disapproves of a given product. Based on the answers to open-ended questions, "language and presentation" and "ease of interpretation" were identified as the two main areas that need improvement. Exploring and evaluating natural language processing solutions to address these needs is another research area that is crucial for making the web accessible to all.

Acknowledgments

Our sincere gratitude goes to all volunteers who took part in our studies, as well as to Dr. Amanda Bloore for her help with proofreading this manuscript.

References

- S. Baron-Cohen, S. Wheelwright, R. Skinner, J. Martin, and E. Clubley. The autism-spectrum quotient (AQ): Evidence from Asperger syndrome/high-functioning autism, males and females, scientists and mathematicians. Journal of Autism and Developmental Disorders, 31(1):5-17, 2001.

- A. Bernas, A. P. Aldenkamp, and S. Zinger. Wavelet coherence-based classifier: a resting-state functional MRI study on neurodynamics in adolescents with high-functioning autism. Computer Methods and Programs in Biomedicine, 154:143-151, 2018.

- T. Britto and E. B. Pizzolato. Towards Web Accessibility Guidelines of Interaction and Interface Design for People with Autism Spectrum Disorder. In Proceedings of ACHI 2016: The Ninth International Conference on Advances in Computer-Human Interactions, pages 138-144, 2016.

- A. Darejeh and D. Singh. A review on user interface design principles to increase software usability for users with less computer literacy. Journal of Computer Science 9 (11): 1443, 2013.

- M. Davidovitch, N. Levit-Binnun, D. Golan, and P. Manning-Courtney. Late diagnosis of autism spectrum disorder after initial negative assessment by a multidisciplinary team. Journal of Developmental & Behavioral Pediatrics, 36(4):227-234, 2015.

- A. Duchowski. Eye Tracking Methodology: Theory and Practice. Springer, second edition, 2009.

- C. Ehmke and S. Wilson. Identifying Web Usability Problems from Eye-tracking Data. In Proceedings of the 21st British HCI Group Annual Conference on People and Computers: HCI... but not as we know it, Vol. 1 of BCS-HCI '07, University of Lancaster, United Kingdom, pages 119-128. Swinton, UK: British Computer Society, 2007.

- S. Eraslan, V. Yaneva, Y. Yesilada, and S. Harper. Do Web Users with Autism Experience Barriers When Searching for Information Within Web Pages? In Proceedings of the 14th Web for All Conference on The Future of Accessible Work, W4A '17, Article 20, Perth, Australia, 2017.

- T. Falkmer, K. Anderson, M. Falkmer, and C. Horlin. Diagnostic procedures in autism spectrum disorders: a systematic literature review. European child & adolescent psychiatry, 22(6):329-340, 2013.

- M. G. Friedman and D. N. Bryen. Web accessibility design recommendations for people with cognitive disabilities. Technology and Disability 19 (4): 205-212, 2007.

- U. Frith. Autism. Explaining the enigma. Blackwell Publishing, Oxford, UK, second edition, 2003.

- F. Happe and U. Frith. The weak coherence account: Detail focused cognitive style in autism spectrum disorder. Journal of Autism and Developmental Disorders, 36:5-25, 2006.

- O. I. Lovaas and L. Schreibman. Stimulus Overselectivity of Autistic Children in a Two Stimulus Situation. Behaviour Research and Therapy, 9:305-310, 1971.

- C. Perez, D. McMeekin, M. Falkmer, and T. Tan. Holistic Approach for Sustainable Adaptable User Interfaces for People with Autism Spectrum Disorder. In Proceedings of the 2017 International World Wide Web Conference, pages 1553-1556, 2017.

- B. O. Ploog. Stimulus overselectivity four decades later: A review of the literature and its implications for current research in autism spectrum disorder. Journal of autism and developmental disorders, 40(11):1332-1349, 2010.

- L. Seeman and M. Cooper. "Cognitive Accessibility User Research." https://www.w3.org/TR/coga-user-research/#autism, 2015.

- S. Stajner, V. Yaneva, R. Mitkov, and S. P. Ponzetto. Effects of lexical properties on viewing time per word in autistic and neurotypical readers. In Proceedings of the 12th Workshop on Innovative Use of NLP for Building Educational Applications, pages 271-281, 2017.

- V. Yaneva, C. Orasan, L. A. Ha, N. Ponomareva, and R. Mitkov. Autism in the Digital Economy: Are Online Product Reviews Perceived as Accessible? (under review)

- V. Yaneva, I. Temnikova, and R. Mitkov. Accessible texts for autism: An eye-tracking study. In Proceedings of the 17th International ACM SIGACCESS Conference on Computers & Accessibility, pages 49-57. ACM, 2015.

- V. Yaneva, L. A. Ha, S. Eraslan, Y. Yesilada, and R. Mitkov. Detecting Autism Based on Eye-Tracking Data from Web Searching Tasks. In Proceedings of the 15th Web for All Conference on The Internet of Accessible Things, W4A 2018, Lyon, France, 23-25 April, 2018.