Beyond Screen and Voice: Augmenting Aural Navigation with Screenless Access

Mikaylah Gross, School of Informatics and Computing, Department of Human-Centered Computing, Indiana University at IUPUI, mikgross@iupui.edu

Davide Bolchini, School of Informatics and Computing, Department of Human-Centered Computing, Indiana University at IUPUI, dbolchin@iupui.edu

Abstract

The current interaction paradigm for accessing the mobile web forces people who are blind to hold out their phone at all times, increasing the risk of the device being dropped or stolen. Moreover, such continuous, two-handed interaction on a small screen hampers the ability of people who are blind to keep their hands free to control aids (e.g., a cane) or touch nearby objects, especially on-the-go. To investigate alternative paradigms, we are exploring and reifying strategies for "screenless access": a browsing approach that enables users to interact touch-free with aural navigation architectures using one-handed, in-air gestures recognized by an off-the-shelf armband. In this article, we summarize key highlights from an exploratory study in which ten participants who are blind or visually impaired experienced our screenless access prototype. We observed proficient navigation performance after basic training, users' conceptual fit with a screen-free paradigm, and low levels of cognitive load, notwithstanding the errors and limitations of the proposed design and system. The full paper appeared in W4A 2018 [1].

Introduction and Background

Whereas a blind person often travels with some form of adapted travel aid held in one hand (e.g., a cane, a human companion, or a guide dog), mobile screen-reading tools force users to keep the phone in their hands at all times and slide their fingers across a small control screen.

Because such screen-centric navigation requires a narrow range of motion and fine-tuned interaction on the screen, users end up restricting phone use to moments when they stand still, halting travel altogether to perform a quick search, send a text, check the weather, locate the next bus transfer, or update social media.

The constant reliance on manipulating a screen surface may cause additional practical problems. For example, to avoid wearing earphones at all times, people who are blind or visually impaired often hold their phone close to their ear while sliding their fingers on the screen. In this scenario, for the audio to be heard directly and discreetly, the phone has to be held backward, with the speaker resting close to the ear. As a result, users have difficulty mapping the direction of a gesture to the direction of navigation across objects on the screen. Existing research acknowledges these types of problems: the extensive breadth of gestures required to interact with a small smartphone screen, combined with a lack of tactile feedback, may decrease efficiency and accuracy when navigating the screen or typing [2-4].

Alternative input solutions, such as voice-based dictation, are becoming available to reduce unwanted friction on a mobile screen, but at the cost of breaking security, social and privacy boundaries. For example, voice interactions are problematic in noisy environments, in public, or in quiet settings (e.g., voice-searching on the bus, dictating a passphrase in a shop, or in a library) [5-7]. Moreover, because of the difficulties in perceiving how others notice them, people who are blind or visually impaired often feel like they stand out in public spaces, and thus avoid noisy, attention-seeking input modes like voice [5].

Navigating through Screenless Access

We envision screenless access as a paradigm in which users can fluidly navigate aural information architectures on-the-go through some form of touch-free, one-handed gestural input, with the advantage of bypassing the requirement of touching or talking to a mobile screen.

As a first attempt to demonstrate what screenless access could bring to the user experience, we reified our vision through AudioSword: a system that uses the off-the-shelf Myo armband [8], connected via Bluetooth to a custom-designed mobile application.

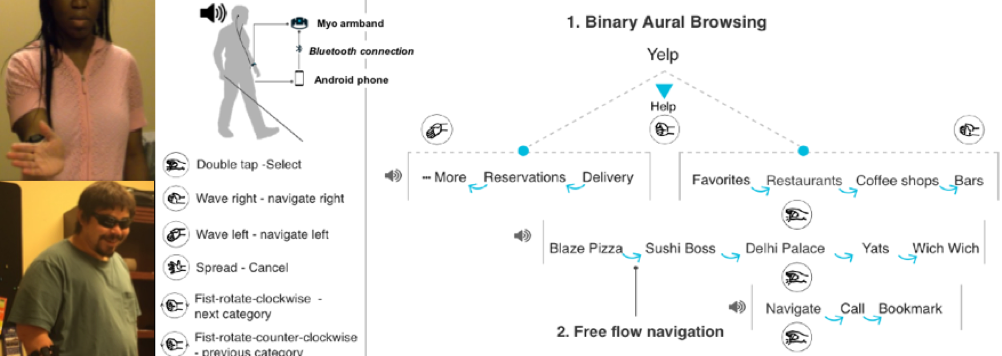

Our approach features three main navigation components (see Figure 1):

Binary Aural Browsing. Mobile screen readers read menu items aloud sequentially, in the order in which they appear on the screen. To enable faster access to topics of interest, we introduced Binary Aural Browsing at the top-level menu, where content items and functions are highly heterogeneous: menu items are "split" into two sets based on topical, usage-based, or user-defined criteria. By default, the user enters the menu at the midpoint between the two sets and can navigate right or left to hear the available options read aloud via text-to-speech (TTS). This "dichotomic" approach to aural browsing allows users to manage long menus through a spatial anchoring of items that can aid recall over repeated use.
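To make the midpoint-and-two-sets layout concrete, the following is a minimal Python sketch of how such a menu could be modeled. It is not AudioSword's implementation: the class `BinaryAuralMenu`, its methods, the offset-based data model, and the example menu items are all hypothetical, and the `speak` callback is a stand-in for a real TTS engine.

```python
from typing import Callable, List


class BinaryAuralMenu:
    """Top-level menu split into a left set and a right set around a midpoint.

    Hypothetical model: offset 0 is the midpoint anchor, offset +k is the k-th
    item of the right set and offset -k is the k-th item of the left set, both
    counted outward from the midpoint.
    """

    def __init__(self, left: List[str], right: List[str],
                 speak: Callable[[str], None] = print) -> None:
        self.left = left      # items reached by navigating left
        self.right = right    # items reached by navigating right
        self.speak = speak    # stand-in for a TTS engine
        self.offset = 0       # users enter the menu at the midpoint

    def current(self) -> str:
        """Label of the item in focus ("midpoint" at the anchor)."""
        if self.offset > 0:
            return self.right[self.offset - 1]
        if self.offset < 0:
            return self.left[-self.offset - 1]
        return "midpoint"

    def move(self, step: int) -> str:
        """Move one item right (+1) or left (-1), stopping at either end, then speak."""
        target = self.offset + step
        if -len(self.left) <= target <= len(self.right):
            self.offset = target
        label = self.current()
        self.speak(label)
        return label


if __name__ == "__main__":
    # Hypothetical split: communication apps on the left, local services on the right.
    menu = BinaryAuralMenu(left=["Messages", "Phone"], right=["Yelp", "Weather"])
    menu.move(+1)  # speaks "Yelp"
    menu.move(-1)  # back to "midpoint"
    menu.move(-1)  # speaks "Messages"
```

Because every item's position is fixed relative to the midpoint, repeated use lets a listener learn "two steps right" as the location of an item, which is one plausible way to realize the spatial anchoring described above.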

Free Flows. Besides supporting manual scanning of menus, and to reduce mechanical interaction steps and mental workload, items in a menu are played by default following an "auto playlist" approach, analogous to the notion of aural flow used in sighted contexts [9]. Once the user selects a menu, the system starts reading out its items sequentially. Such ballistic navigation actions, performed by the system, can move the user closer to the point of interest with minimal effort. Once the user identifies a point of interest, the user stops the free flow and performs more local navigation to select it.
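The free flow loop can be sketched in a few lines of Python. This is an illustrative simplification, not the prototype's code: the function `free_flow` and its callbacks are hypothetical, and the stop signal is polled between items, whereas a real system would interrupt TTS playback asynchronously. "Restaurant C" is a placeholder item.

```python
from typing import Callable, List, Optional


def free_flow(items: List[str],
              speak: Callable[[str], None],
              stop_requested: Callable[[], bool]) -> Optional[int]:
    """Read menu items aloud in order, like an auto playlist, until stopped.

    Returns the index of the item in focus when the user stopped the flow, so
    manual (local) navigation can resume from that item, or None if the whole
    menu played without interruption.
    """
    for index, item in enumerate(items):
        speak(item)                # system-driven step: no gesture required
        if stop_requested():       # polled between items in this simplified sketch
            return index
    return None


if __name__ == "__main__":
    # Simulate a user who stops the flow right after the second item.
    signals = iter([False, True])
    focus = free_flow(["Delhi Palace", "Yats", "Restaurant C"],
                      speak=print,
                      stop_requested=lambda: next(signals, False))
    print("manual browsing starts at index", focus)  # prints: manual browsing starts at index 1
```

Returning the focus index rather than a boolean is the design choice that lets the "ballistic" system-driven phase hand over cleanly to the user's local navigation.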

One-handed Gestures. To control aural menus, we used the Myo armband, connected via Bluetooth to an Android phone, to recognize six hand/finger gestures for input: wave right, wave left, finger spread, double tap, fist rotate clockwise, and fist rotate counterclockwise. These nimble gestures map to actions over the navigation menus (Figure 1). Wave right/left move the focus forward and backward in a menu. Double tap selects the item in focus; finger spread moves the focus one step up in the navigation hierarchy. The fist rotate clockwise action is similar to opening a door with a round doorknob: when users rotate their fist clockwise, the focus shifts to the next item at the same level of the navigation hierarchy. Performing fist rotate counterclockwise moves the focus to the previous item.
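The gesture vocabulary above amounts to a lookup from recognized gesture to navigation action. The Python sketch below encodes that mapping as described in the text; the enum names are our own labels (they are not identifiers from the Myo SDK), and `dispatch` is a hypothetical helper in which `None` models a gesture the recognizer missed.

```python
from enum import Enum, auto
from typing import Dict, Optional


class Gesture(Enum):
    """The six in-air gestures used by the prototype (names are our own labels)."""
    WAVE_RIGHT = auto()
    WAVE_LEFT = auto()
    FINGER_SPREAD = auto()
    DOUBLE_TAP = auto()
    FIST_ROTATE_CW = auto()
    FIST_ROTATE_CCW = auto()


class Action(Enum):
    FOCUS_FORWARD = auto()          # next item in the current menu
    FOCUS_BACKWARD = auto()         # previous item in the current menu
    SELECT = auto()                 # activate the item in focus
    UP_ONE_LEVEL = auto()           # one step up the navigation hierarchy
    NEXT_SAME_LEVEL = auto()        # next item at the same hierarchy level
    PREVIOUS_SAME_LEVEL = auto()    # previous item at the same hierarchy level


GESTURE_TO_ACTION: Dict[Gesture, Action] = {
    Gesture.WAVE_RIGHT: Action.FOCUS_FORWARD,
    Gesture.WAVE_LEFT: Action.FOCUS_BACKWARD,
    Gesture.DOUBLE_TAP: Action.SELECT,
    Gesture.FINGER_SPREAD: Action.UP_ONE_LEVEL,
    Gesture.FIST_ROTATE_CW: Action.NEXT_SAME_LEVEL,
    Gesture.FIST_ROTATE_CCW: Action.PREVIOUS_SAME_LEVEL,
}


def dispatch(recognized: Optional[Gesture]) -> Optional[Action]:
    """Translate a recognizer result into a navigation action.

    None stands for a gesture the recognizer missed; no action is taken.
    """
    if recognized is None:
        return None
    return GESTURE_TO_ACTION[recognized]
```

A single lookup table keeps the gesture vocabulary in one place, so alternative mappings can be tried without touching the navigation logic.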

Methods

Procedure

Over a period of six months, we conducted an IRB-approved study in collaboration with participants and trainers working at a major workforce development program in the Midwest for people who are blind or visually impaired. The study was divided into six distinct sections that took approximately two hours to complete.

Introduction and Consent. Participants were welcomed and introduced to the purpose of the study, its duration, and the compensation for their time. Informed consent forms were signed before proceeding to the pre-task questions.

Pre-Task Questions. Semi-structured interview questions gathered participants' demographics and gauged their prior experience with accessible applications.

Training. Participants had an opportunity to learn and practice the gestures with and without the armband, along with an introduction to the concepts used throughout screenless access (e.g., binary aural browsing, free flow navigation).

Navigational Tasks with Post-Task Questions. Participants completed a series of tasks, detailed in the subsequent section, followed by post-task questions using the System Usability Scale (SUS) and NASA Task Load Index (NASA-TLX).

Post-Test Semi-Structured Interview. A final semi-structured interview solicited feedback about participants' overall impressions of and experiences with screenless access.

Open Discussion. Participants had an opportunity to discuss advantages, disadvantages, and suggestions for improvements in future iterations of screenless access.
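The NASA-TLX administered after the navigational tasks can be scored in a weighted form (using pairwise comparisons of the six subscales) or an unweighted "raw" form; which variant this study used is not stated here. The Python sketch below assumes the raw variant, averaging six subscale ratings on a 0-100 scale. The function name `raw_tlx` and the subscale keys are hypothetical, and the example ratings are invented, not study data.

```python
from statistics import mean
from typing import Dict

# The six NASA-TLX subscales. For "performance", the scale runs from perfect
# (low) to failure (high), so a lower rating means less workload on every subscale.
TLX_SUBSCALES = ("mental", "physical", "temporal", "performance", "effort", "frustration")


def raw_tlx(ratings: Dict[str, float]) -> float:
    """Unweighted ("raw") NASA-TLX score: the mean of the six 0-100 subscale ratings."""
    missing = [name for name in TLX_SUBSCALES if name not in ratings]
    if missing:
        raise ValueError(f"missing subscales: {missing}")
    for name in TLX_SUBSCALES:
        if not 0 <= ratings[name] <= 100:
            raise ValueError(f"{name} rating out of range: {ratings[name]}")
    return float(mean(ratings[name] for name in TLX_SUBSCALES))


if __name__ == "__main__":
    # Invented ratings for illustration only (not study data).
    example = {"mental": 20, "physical": 50, "temporal": 20,
               "performance": 20, "effort": 20, "frustration": 20}
    print(raw_tlx(example))  # 25.0
```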

Tasks

In the central portion of the study, the participants performed a series of five tasks that progressed in difficulty, beginning with opening a phone application and finishing with navigation through several layers of the aural information architecture. The five tasks are as follows:

1. Find and select the Yelp application.

2. (a) Within the Yelp application, could you find and select Restaurants? (b) You will listen to the list of restaurants being called out in sequence. Could you show me how you would stop this and browse manually?

3. Find and select the restaurant called Delhi Palace.

4. Find and select the restaurant called Yats. Then, move to browse through Coffee Shops.

5. Find and select the coffee shop called Mojo Coffee House. Then, listen to the walking directions.

Participants

Table 1. De-identified participant demographics.

| ID | Age | Gender | Handedness | Level of Vision | Duration at Current Level | Phone OS | Smartphone Experience | Education |
|---|---|---|---|---|---|---|---|---|
| P1 | 34 | F | Right | Light perception | Birth | iOS | 7 years | Bachelors |
| P2 | 26 | M | Right | Light perception | 13 years | iOS | 5 years | Bachelors |
| P3 | 29 | M | Right | Light perception | 18 years | iOS | 3 years | Bachelors |
| P4 | 61 | M | Right | Total blindness | 18 years | iOS | 4 years | Bachelors |
| P5 | 48 | M | Right | Total blindness | Birth | Android | 9 years | High school |
| P6 | 31 | M | Right | Total blindness | 6 years | iOS | 7 years | Some college |
| P7 | 47 | F | Right | Total blindness | Infancy | iOS | 4 years | Bachelors |
| P8 | 33 | F | Right | Total blindness | 10 years | iOS | 1 year | Associate |
| P9 | 33 | F | Right | Light perception | Birth | iOS | 5 years | Masters |
| P10 | 31 | M | Right | Light perception | 15 years | iOS | 5 years | Masters |

Ten participants (four females and six males) took part in the study. Five participants reported total blindness, while the remaining five had various levels of light perception. Four participants had been blind since birth or infancy, and six reached their current level of vision later in life, between the ages of 11 and 43. Participants had between one and nine years of smartphone experience, primarily with iOS (nine of ten). A synopsis of the de-identified demographics is shown in Table 1.
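As a consistency check, the summary figures above can be recomputed directly from Table 1. The Python sketch below rests on two assumptions of ours: that onset age equals current age minus the years listed under "Duration at Current Level", and that "Infancy" (P7) is grouped with "Birth", which is what reproduces the count of four. The `summarize` helper and its output keys are hypothetical.

```python
# Rows transcribed from Table 1:
# (ID, age, gender, level of vision, duration at current level, smartphone years)
TABLE_1 = [
    ("P1", 34, "F", "Light perception", "Birth", 7),
    ("P2", 26, "M", "Light perception", 13, 5),
    ("P3", 29, "M", "Light perception", 18, 3),
    ("P4", 61, "M", "Total blindness", 18, 4),
    ("P5", 48, "M", "Total blindness", "Birth", 9),
    ("P6", 31, "M", "Total blindness", 6, 7),
    ("P7", 47, "F", "Total blindness", "Infancy", 4),
    ("P8", 33, "F", "Total blindness", 10, 1),
    ("P9", 33, "F", "Light perception", "Birth", 5),
    ("P10", 31, "M", "Light perception", 15, 5),
]


def summarize(rows):
    """Recompute the demographic summary reported in the text."""
    # Assumption: "Birth" and "Infancy" entries are both counted as congenital.
    congenital = [r for r in rows if isinstance(r[4], str)]
    # Assumption: onset age = current age - years at the current level of vision.
    onset_ages = [r[1] - r[4] for r in rows if isinstance(r[4], int)]
    phone_years = [r[5] for r in rows]
    return {
        "female": sum(r[2] == "F" for r in rows),
        "male": sum(r[2] == "M" for r in rows),
        "total_blindness": sum(r[3] == "Total blindness" for r in rows),
        "light_perception": sum(r[3] == "Light perception" for r in rows),
        "congenital": len(congenital),
        "later_onset": len(onset_ages),
        "onset_age_range": (min(onset_ages), max(onset_ages)),
        "smartphone_years_range": (min(phone_years), max(phone_years)),
    }


if __name__ == "__main__":
    print(summarize(TABLE_1))
```

The derived onset ages (13, 11, 43, 25, 23, and 16) span exactly the 11-to-43 range stated in the text.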

Results Synopsis

At the conclusion of the study, we were able to gather both quantitative and qualitative results regarding participants' performance and experiences with screenless access. We were unable to use the data from our 10th participant in our results due to video recording difficulties.

Styles of Screenless Navigation

Throughout our study, we identified two distinct approaches to screenless navigation: autonomous and step-by-step.

Autonomous. Participants who exhibited an autonomous style moved through the architecture quickly and required little to no assistance from the moderator. They recognized errors when they occurred and recovered independently. Across all task instances, the autonomous style occurred 69% of the time. In some instances, once participants had become highly autonomous, they performed gestures with different adaptations of hand position and orientation, which we term microvariations. For example, a participant might perform the wave right gesture as a quick wrist snap rather than a full extension of the hand to the right, to save time and physical effort.

Step-by-step. The other navigational style was step-by-step, which occurred in 22% of all task instances. Here, participants moved through the aural architecture with caution and retraced exact steps learned in training with little to no variation in gesture performance. In addition, they relied on aural and vibrotactile feedback before proceeding to the next gesture. If a system error occurred, these participants moved even more cautiously through the menu to avoid further errors.

The remaining 9% of task instances exhibited a mixture of autonomous and step-by-step characteristics.

Free Flow Interactions

Additionally, we observed participants displaying clear preferences when interacting with the free flow.

Select First. Under the first preference, participants stopped the free flow within the first item after the menu began playing. They then took control from the free flow and browsed the menu manually to gain their orientation. This style occurred in 89% of task instances.

Aural Overview then Select. The second preference occurred in 11% of all task instances. Participants listened to the free flow audio to gain orientation within the architecture. After some time, or after several items had passed, the participant stopped the free flow using the wave left/right gesture and began browsing manually.

Aural Navigation Performance

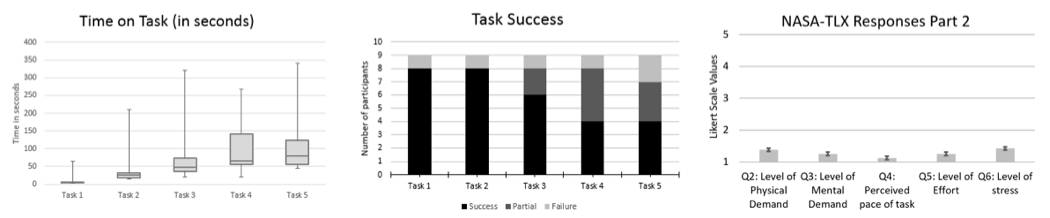

For aural navigation performance, we analyzed the findings for errors, task success, and average error rate.

System Errors. System errors were defined as instances in which the system performed an incorrect action that affected the user's performance; they are shown in more detail in Figure 2. Missed recognition accounted for 55% of all system errors identified. This error occurred when the system did not recognize the gesture the participant performed. Missed recognition differs from Incorrect recognition (22%), the second most common system error, in which the participant performed the correct gesture but the system mapped it to another gesture. When system errors occurred, some participants used microvariations to improve recognition. For example, several participants adapted the double tap gesture to explore whether alternative orientations or variations would produce better results.

Design Errors. Conversely, design errors were defined as a mismatch between our design choices and how the participant interacted with the system. More than 50% of all design errors involved a Gesture performed incorrectly. For example, one participant performed the wave right gesture with her fingers spread open, which the system recognized as a finger spread gesture rather than the intended action. Gesture performed incorrectly differs from Incorrect recognition because here the participant did not perform the gesture as instructed, so the system could not correctly identify it.
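The difference between the three error categories named above comes down to two questions: did the participant perform the gesture as instructed, and what did the system recognize? The hypothetical coding function below, written in Python, makes that decision order explicit. It covers only these three categories (Figure 2 reports others), the parameter names and gesture strings are our own, and checking the participant's form first is our assumption about how overlapping cases would be coded.

```python
from typing import Optional


def classify_gesture_event(intended: str,
                           performed_as_instructed: bool,
                           recognized: Optional[str]) -> Optional[str]:
    """Assign one coded gesture event to an error category, or None if no error.

    intended: gesture the participant meant to perform, e.g. "wave_right"
    performed_as_instructed: coder's judgment that the form matched training
    recognized: gesture reported by the system, or None if nothing was recognized
    """
    if not performed_as_instructed:
        # Design error: the participant's form deviated from the taught gesture,
        # e.g. a wave right with fingers spread, read by the system as a finger spread.
        return "Gesture performed incorrectly"
    if recognized is None:
        return "Missed recognition"         # system error: correct gesture, no response
    if recognized != intended:
        return "Incorrect recognition"      # system error: correct gesture, wrong mapping
    return None                             # performed and recognized correctly
```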

Task Success. We measured task success for all participants across all tasks based on the following criteria: if the user had no design errors, the task was labeled a success (1 point); if design errors made up 50% or less of the total number of gestures, it was labeled a partial success (0.5 points); if design errors made up more than 50% of the total number of gestures, the user did not complete the task, or the user exceeded three attempts, it was labeled a failure (0 points). The six failed tasks resulted from a mixture of design errors, system errors, incorrect microvariations, gesture substitution, and the inability to complete a task within three attempts. On average, participants spent between 30 seconds and 2 minutes on Tasks 2 through 5.
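The scoring rule can be written as a small Python function. This is a sketch under stated assumptions: the `TaskRecord` fields are a hypothetical record format, and because the criteria do not say which condition wins when several apply, the sketch checks the failure conditions (not completed, more than three attempts) before looking at design errors.

```python
from dataclasses import dataclass


@dataclass
class TaskRecord:
    """One participant's performance on one task (hypothetical record format)."""
    design_errors: int    # gestures coded as design errors
    total_gestures: int   # all gestures performed during the task
    completed: bool       # whether the task goal was reached
    attempts: int         # attempts used (more than three counts as failure)


def score_task(t: TaskRecord) -> float:
    """Return 1.0 (success), 0.5 (partial success) or 0.0 (failure).

    Failure conditions (not completed, more than three attempts) are checked
    first; that precedence is an assumption of this sketch.
    """
    if t.design_errors > t.total_gestures:
        raise ValueError("design errors cannot exceed total gestures")
    if not t.completed or t.attempts > 3:
        return 0.0
    if t.design_errors == 0:
        return 1.0
    share = t.design_errors / t.total_gestures
    return 0.5 if share <= 0.5 else 0.0
```

For example, a completed task with 2 design errors out of 8 gestures (25%) on the first attempt scores 0.5.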

Overall Responses to Screenless Access

Overall, participants' self-reported responses to the System Usability Scale rated screenless access at 83.9, well above the conventional benchmark of 68. In addition to our quantitative data, we conducted a qualitative analysis of participants' responses about their overall experience. Three high-level themes arose from our data:
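For readers unfamiliar with how a figure such as 83.9 is produced, the Python sketch below implements the standard SUS scoring rule for a single respondent: ten items rated 1-5, odd items contributing (rating - 1), even items contributing (5 - rating), and the sum multiplied by 2.5 to give a 0-100 score. The function name is ours and the example responses are invented for illustration; they are not study data.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Standard System Usability Scale score (0-100) for one respondent.

    responses: the ten item ratings in questionnaire order, each 1-5.
    Odd-numbered items are positively worded and contribute (rating - 1);
    even-numbered items are negatively worded and contribute (5 - rating).
    The summed contributions (0-40) are multiplied by 2.5.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly ten responses")
    if any(not 1 <= r <= 5 for r in responses):
        raise ValueError("each response must be between 1 and 5")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        total += (rating - 1) if item_number % 2 == 1 else (5 - rating)
    return total * 2.5


if __name__ == "__main__":
    # Invented responses for illustration only (not study data).
    print(sus_score([4, 2, 4, 2, 4, 2, 4, 2, 4, 2]))  # 75.0
```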

Convenient. Participants liked that the system could be used one-handed without touching the phone screen. For example, P1 noted that "when you're already fumbling with your cane, the last thing you want to do is fumble with your phone."

Systematic. The system allowed for the linearization of navigation across pages. As P2 indicated, "compared to [using] the phone, for instance, there's a lot of jumping around on the screen, and there's a lot of [pages] loading. It [current TTS software] jumps to the top of the screen, and you've lost your position, and you have to relocate... [Screenless access] has a very systematic list of items and it cycles through. There is a specific way to stop the cycling and to select something."

Private. Because the phone could be kept in the participant's pocket, there was an added layer of security for both the phone's hardware and its contents. For example, P4 discussed the problems that arise from having the phone in plain sight where it can be stolen, while P1 noted that people would no longer be able to determine what she was trying to access on her phone if she were to use the in-air gestures of screenless access.

Limits and Opportunities for Gestures

Several participants also talked about the limitations of and opportunities for using gestures in screenless browsing.

Social perception. Participants expressed sensitivity about performing gestures in public, where it may be unclear to others what the user is doing. P4 raised this twice, stating: "The only disadvantage I could see is if it didn't get the gestures right and you could be standing around waiting in line, and people could think 'what is this [person] doing?' Much more discreet gestures would be better."

Physical strain. Although physical strain measured low on our NASA-TLX, it was still mentioned during the open discussion. P2 indicated that this method placed more physical strain on arms, hands, and fingers than participants were accustomed to: "Some of the gestures could have been simpler gestures... something that doesn't require you to stress your fingers as much. That's a lot more taxing than swiping on the screen."

Finger dexterity. Finger dexterity was discussed for both its limitations and its opportunities. P2, a trainer in accessible technologies, believed that screenless access might not benefit individuals who lack the dexterity to perform stringent in-air gestures; however, it could be a solution for individuals who cannot perform fine-tuned gestures on a smaller surface.

Potential Use Cases

Lastly, we identified additional use cases that participants said screenless access would make possible.

Touch-free interaction. This new style of interaction would be touch-free, bypassing the physical friction of on-screen gestures. Participants enjoyed exploring this experience, with two participants specifically mentioning that they would no longer need to take out their phone to interact with it.

Time saving. Screenless access would let participants accomplish multiple tasks simultaneously, thus saving time. For example, P7 shared that, as a mother, "anytime I am on the go, or sometimes even at home when I have children... it [screenless access] would allow you to do multiple things at once."

Traveling. Individuals using screenless access could travel from place to place more efficiently. Information about routes and arrival times could be searched while traversing, riding in a car, or waiting at the bus stop, all while keeping the phone in their pocket.

Discussion

Overall, participants reported a positive experience when using screenless access. Although only a prototype, the system indicated that screenless access has the potential to enhance the navigational browsing experience while removing the constant tethering to on-screen gestures or voice-based dictation. Every participant provided feedback and connected screenless access to one or more use cases.

We also observed that, although the system was a prototype, it was able to accommodate the microvariations of in-air gestures during the study. Participants demonstrated that these microvariations could improve speed, accuracy, and recognition while reducing the physical effort of performing the gestures. Some of these microvariations could also be used to make gestures more discreet.

Finally, privacy was of interest to us throughout our results, because screenless access provides an added layer of privacy and security for an individual's hardware and software. This layer of privacy and security cannot currently be achieved efficiently when using on-screen gestures or text-to-speech. Participants appreciated this because, for the first time, they could have full control over who can and cannot access their information. However, this privacy could also create difficulties for individuals themselves: if people are unaware of what the user is doing, the user could become a target for unwanted attention.

This study had three main limitations: the system was a prototype, so participants' performance was affected by system errors; the gesture vocabulary was neither discreet nor accommodating of participants' needs and desires; and the sample was limited in both size and location.

Ongoing Research and Future Work

Based on these encouraging results, we are continuing to explore the most efficient navigation strategies and quiet, discreet input modalities that can enable the realization of screenless access for people who are blind. With the increasing availability of arm-worn and hand-worn gestural input devices, we see screenless access as an emerging paradigm that can be fruitfully explored to augment the aural browsing of mobile content and services.

Acknowledgments

This research material is based on work supported by the NSF Grant #1018054, and a Google Faculty Research Award. Any opinions, findings and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect those of the NSF or other entities. We would also like to thank Imran Ahmed, James Michaels, Bill Powell, Dhanashree Bhat, Shilpa Pachhapurkar, Souyma Dash, Joe Dara, and Christopher Meyer for their assistance and work throughout this project.

References

1. Gross, M., Dara, J., Meyer, C., and Bolchini, D., Exploring Aural Navigation by Screenless Access, in Proceedings of the 15th International Cross-Disciplinary Conference on Web Accessibility (W4A '18). ACM, New York, NY, USA (accepted).

2. Oh, U., et al., Investigating Microinteractions for People with Visual Impairments and the Potential Role of On-Body Interaction, in Proc. of the 19th Int. ACM SIGACCESS Conference on Computers and Accessibility. 2017, p. 22-31.

3. Abdolrahmani, A., R. Kuber, and A. Hurst, An empirical investigation of the situationally-induced impairments experienced by blind mobile device users, in Proc. of the 13th Web for All Conference. 2016, p. 1-8.

4. Li, M., M. Fan, and K. Truong, BrailleSketch: A Gesture-based Text Input Method for People with Visual Impairments, in Proc. of the 19th Int. ACM SIGACCESS Conference on Computers and Accessibility. 2017.

5. Ahmed, T., et al., Privacy Concerns and Behaviors of People with Visual Impairments, in Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems. 2015, ACM: Seoul, Republic of Korea. p. 3523-3532.

6. Shinohara, K. and J.O. Wobbrock, In the shadow of misperception: assistive technology use and social interactions, in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. 2011, p. 705-714.

7. Shinohara, K. and J.O. Wobbrock, Self-Conscious or Self-Confident? A Diary Study Conceptualizing the Social Accessibility of Assistive Technology. ACM Trans. Access. Comput., 2016. 8(2): p. 1-31.

8. Myo. Available from: https://www.myo.com/ [cited 2016].

9. Rohani Ghahari, R., J. George-Palilonis, and D. Bolchini, Mobile Web Browsing with Aural Flows: An Exploratory Study. International Journal of Human-Computer Interaction, 2013. 29(11): p. 717-742.